Convolutional Neural Network Backbone

Convolutional neural networks (CNNs) are used in many visual tasks. Networks built for different visual tasks often share a similar set of feature-extraction layers, which is referred to as the backbone. Researchers typically take a backbone that has been successful in the ImageNet competition and combine it with different loss functions to solve different types of visual tasks.

In this note, we will review some of the most popular backbones, ordered roughly by publication date.

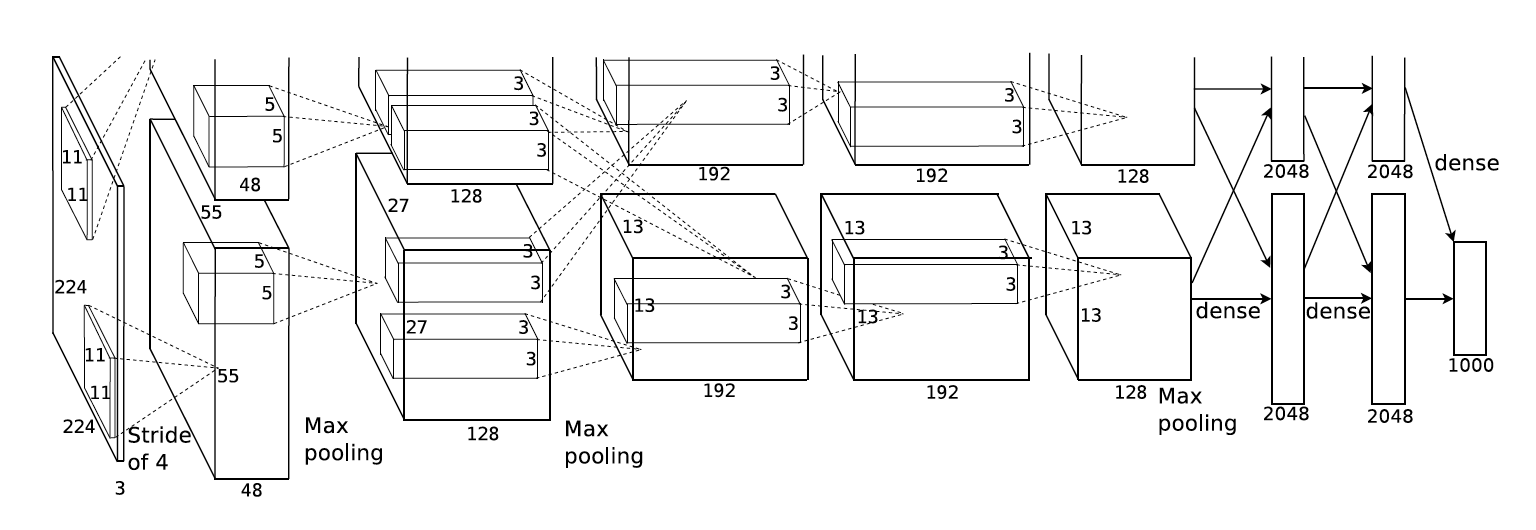

AlexNet

AlexNet (2012) achieved great success in the ImageNet competition. Note that from the second layer on, the network is split into two groups because of GPU memory limitations (it was originally trained on two GTX 580 GPUs with 3 GB of memory each).

It starts with a large kernel size (11x11) and few output channels (96), then changes to a small kernel size (3x3) with more output channels (256 or 384).

VGG16/19

VGG16/19 (2014) is much deeper than AlexNet and uses only 3x3 kernels. The network can be divided into several groups, where all layers in a group share the same number of output channels and the same spatial resolution. Between consecutive groups, a max pooling layer halves the spatial resolution (2x downsampling), and the number of output channels doubles until it reaches 512.
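The grouping rule can be sketched in a few lines of Python. This is only an illustration: the function name `vgg_stage_plan` is hypothetical, and the per-group convolution counts and the 224x224 input size are taken from the VGG paper rather than from this note.

```python
def vgg_stage_plan(convs_per_stage, input_size=224, base_channels=64, max_channels=512):
    """Return (channels, spatial_size, num_convs) for each group of a VGG-style net."""
    plan = []
    channels, size = base_channels, input_size
    for num_convs in convs_per_stage:
        plan.append((channels, size, num_convs))
        size //= 2                                  # max pooling halves the resolution
        channels = min(channels * 2, max_channels)  # width doubles, capped at 512
    return plan


# Number of 3x3 convolutions per group (assumed from the VGG paper).
VGG16_CONVS = [2, 2, 3, 3, 3]   # 13 conv layers + 3 fully connected = 16
VGG19_CONVS = [2, 2, 4, 4, 4]   # 16 conv layers + 3 fully connected = 19

for channels, size, num_convs in vgg_stage_plan(VGG16_CONVS):
    print(f"{num_convs} x conv3x3, {channels} channels at {size}x{size}")
```

The last two groups both use 512 channels, which is where the cap on doubling becomes visible.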

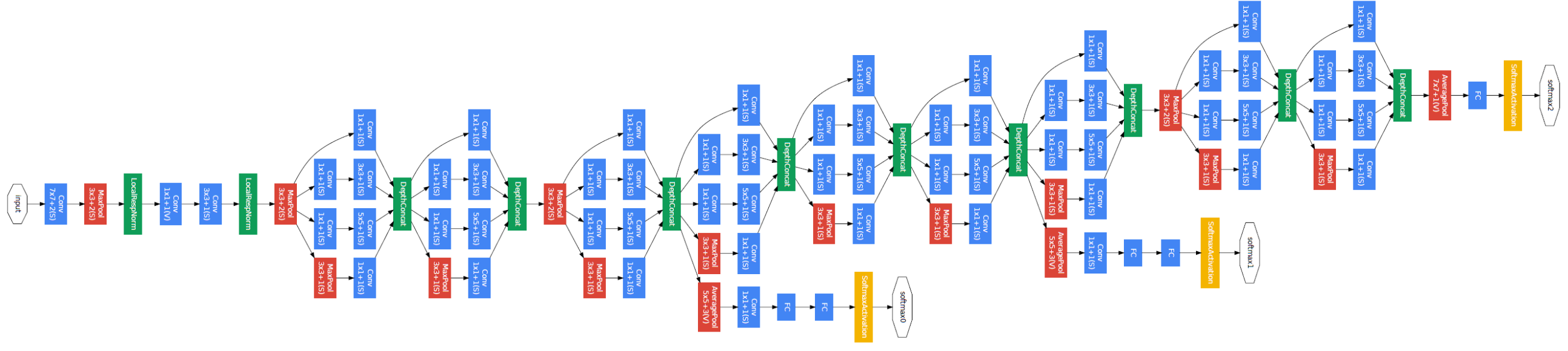

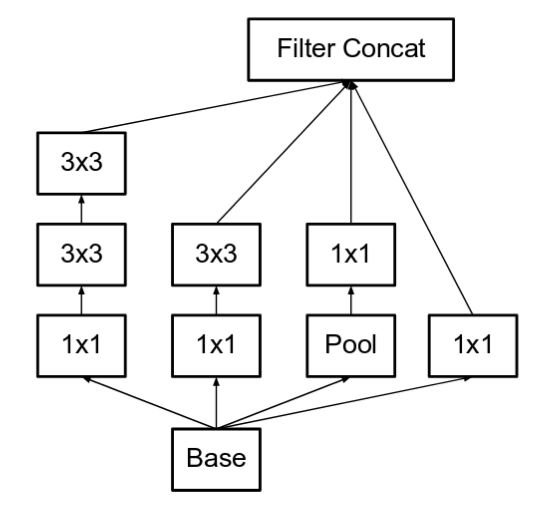

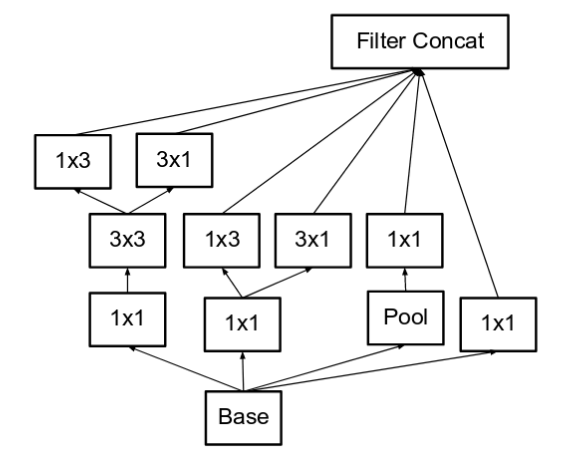

GoogLeNet/Inception V1

GoogLeNet (2015), also known as Inception V1, explores the idea of network in network and makes the network even deeper. It consists of Inception blocks (shown below), and the outputs of intermediate layers are also included in the loss function through auxiliary classifiers. In each Inception block, different kernel sizes are applied in parallel and their outputs are combined via concatenation.

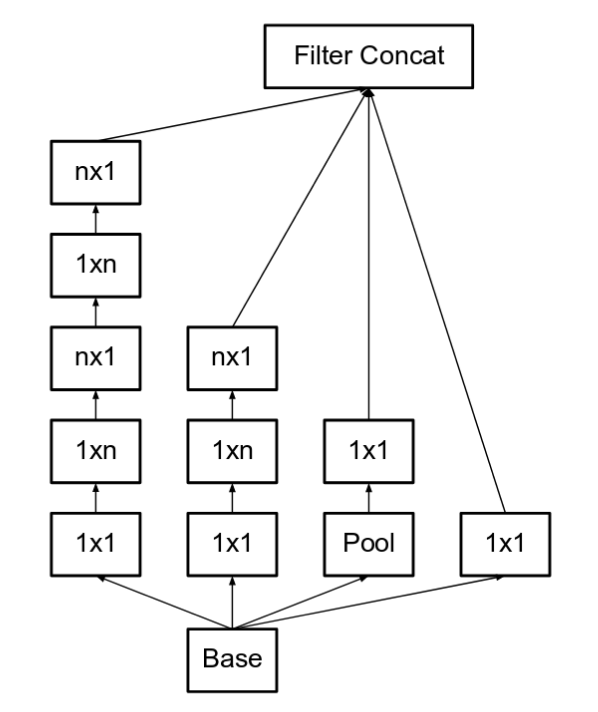

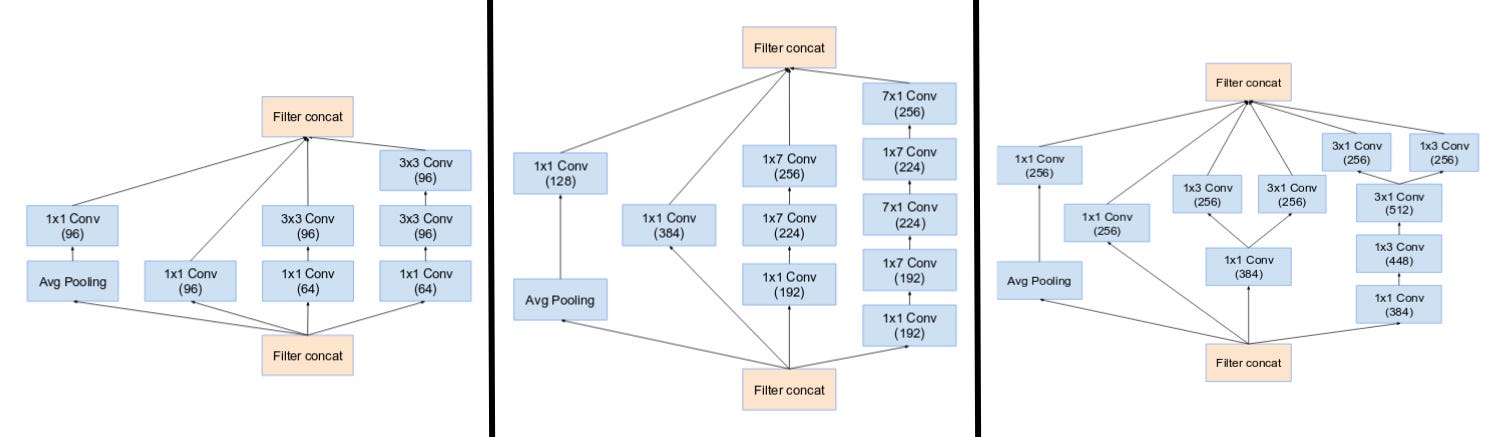

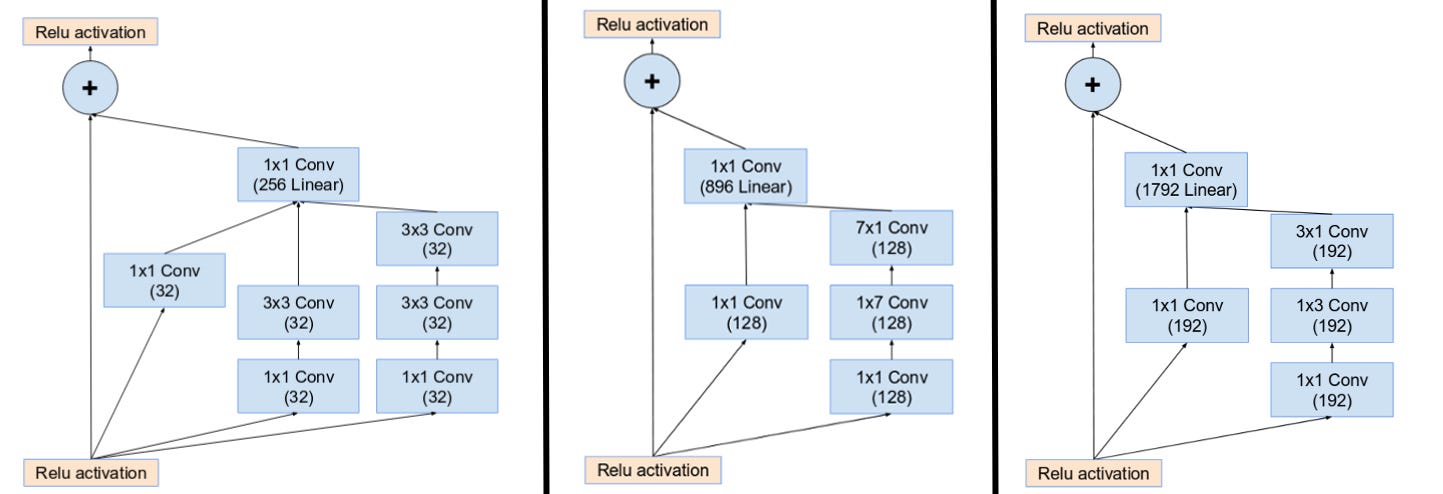
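Because the branches of an Inception block are concatenated along the channel axis, the output width of a block is simply the sum of the branch widths. A minimal sketch follows, assuming the branch widths of the first Inception block ("3a") as listed in the GoogLeNet paper; the helper name is hypothetical.

```python
def inception_output_channels(branch_channels):
    """Channel count after concatenating parallel branches along the channel axis."""
    return sum(branch_channels.values())


# Output widths of the four parallel branches of block "3a"
# (assumed from the GoogLeNet paper, not stated in this note).
inception_3a = {"1x1": 64, "3x3": 128, "5x5": 32, "pool_proj": 32}

print(inception_output_channels(inception_3a))  # 256
```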

Inception V2 & V3

Inception V2 & V3 (2016) introduce a number of upgrades that increase accuracy and reduce computational complexity:

- Replacing each 5x5 convolution with two stacked 3x3 convolutions

- Using factorized (asymmetric) filters, e.g., a 3x3 convolution is replaced by a 1x3 convolution followed by a 3x1 convolution

- Expanding the filter banks in the module (making them wider instead of deeper) to remove the representational bottleneck.
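The savings from the first two factorizations can be checked by counting weights. The sketch below assumes the same (hypothetical) channel count `c` for inputs and outputs of every layer and ignores biases; the ratios do not depend on the value of `c`.

```python
def conv_weights(kh, kw, c_in, c_out):
    """Number of weights in a kh x kw convolution (bias ignored)."""
    return kh * kw * c_in * c_out


c = 64  # hypothetical channel count, used for every layer

five = conv_weights(5, 5, c, c)
two_threes = 2 * conv_weights(3, 3, c, c)          # two stacked 3x3 convolutions
three = conv_weights(3, 3, c, c)
asym = conv_weights(1, 3, c, c) + conv_weights(3, 1, c, c)  # 1x3 followed by 3x1

print(f"two 3x3 vs one 5x5: {two_threes / five:.2f}")    # 0.72
print(f"1x3 + 3x1 vs one 3x3: {asym / three:.2f}")       # 0.67
```

Two stacked 3x3 convolutions cover the same 5x5 receptive field with 18/25 of the weights, and the 1x3 plus 3x1 pair covers a 3x3 receptive field with 6/9 of the weights.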

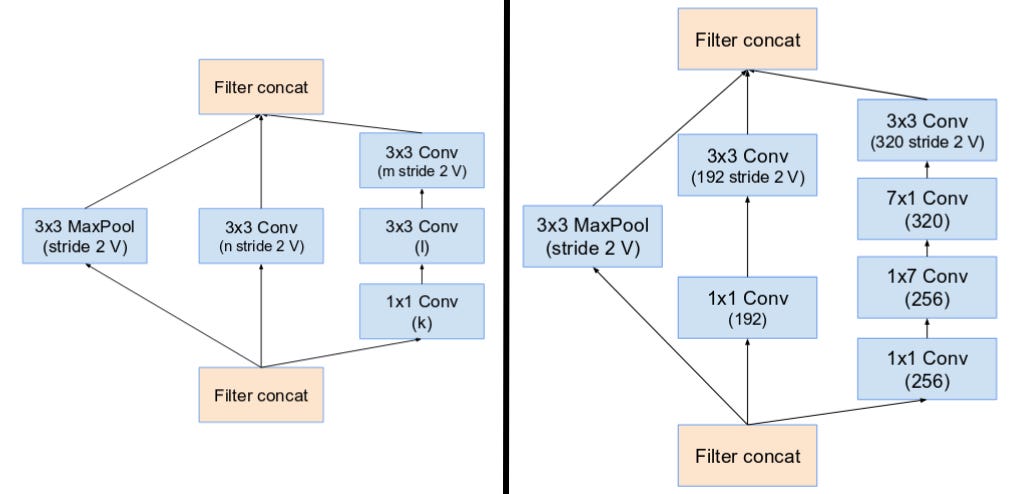

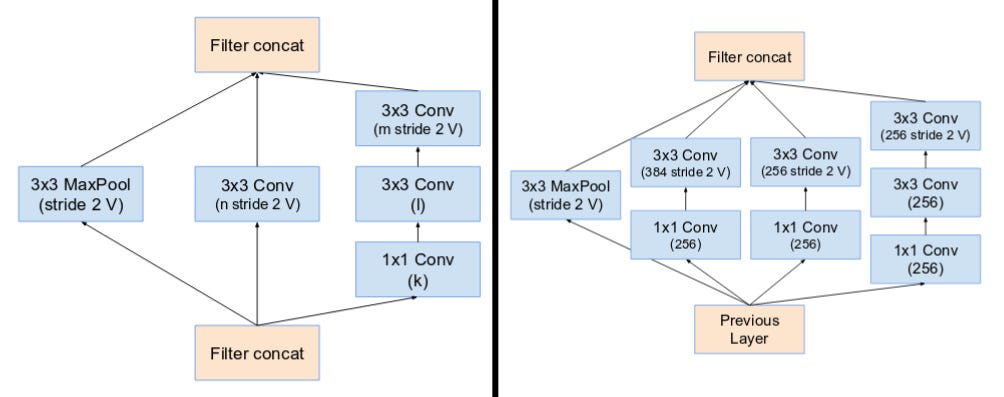

Inception v4

Inception v4 (2017) makes the modules more uniform. It consists of two kinds of blocks:

- inception module

- reduction block

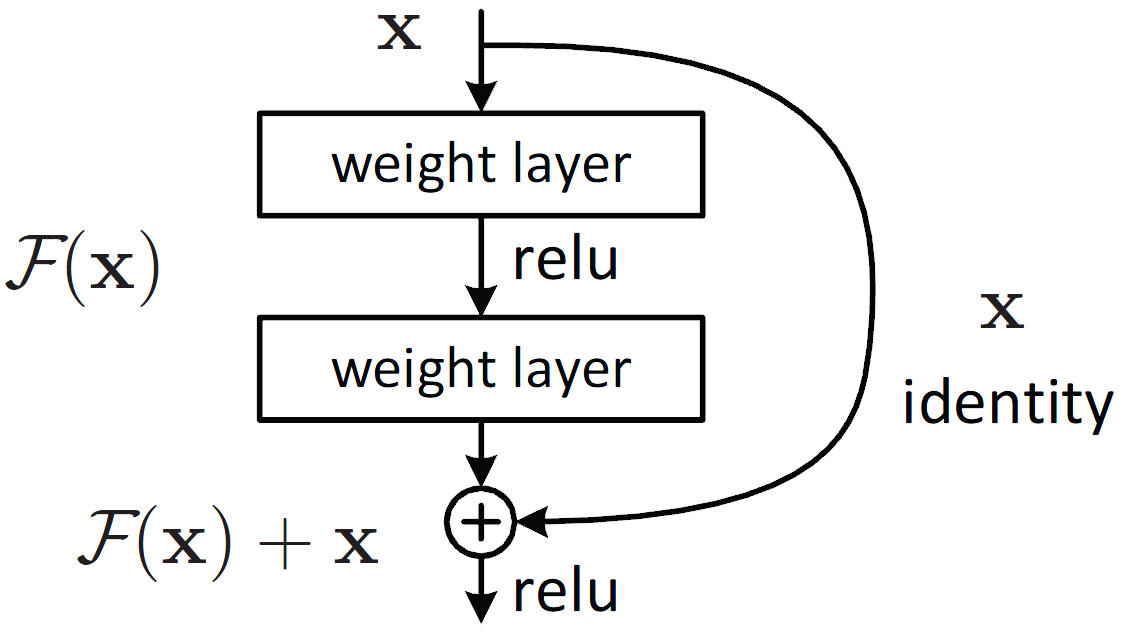

ResNet

ResNet (2016) addresses the problem of training deep neural networks, where vanishing gradients typically make training very hard. To address this problem, the residual block is proposed. With $y = f(x) + x$, a block can fall back to the identity mapping by driving $f(x)$ toward zero, so in principle inserting residual blocks into a network should not degrade its performance.

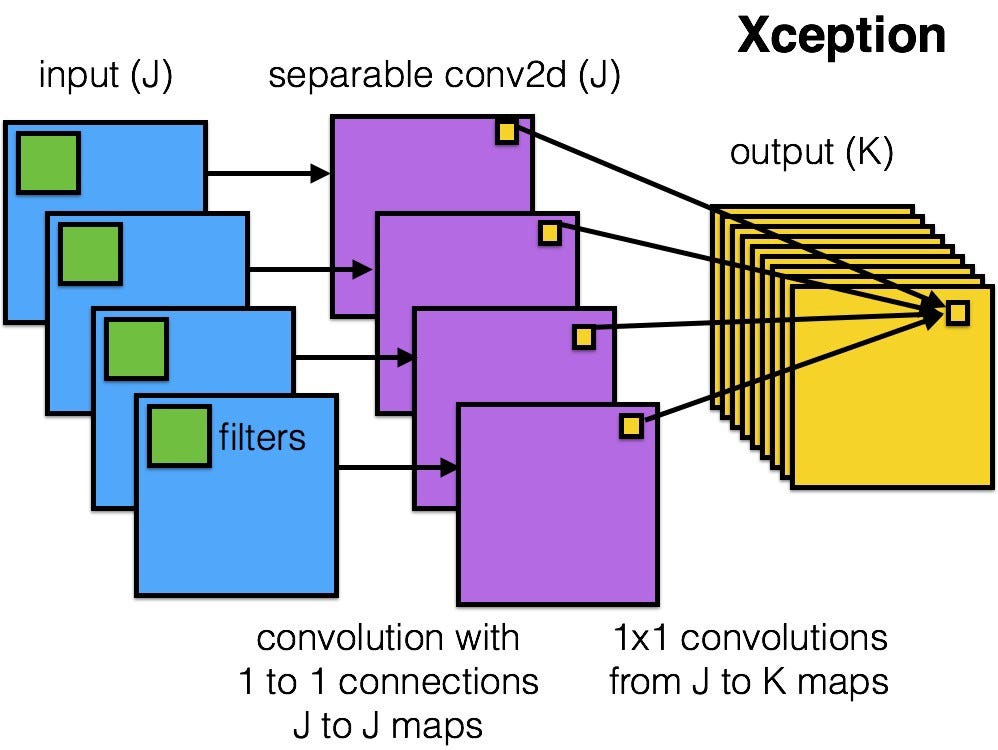
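The shortcut $y = f(x) + x$ can be illustrated on plain Python lists. This is a toy sketch, not the ResNet implementation: `zero_branch` and `scale_branch` are hypothetical stand-ins for the learned convolutional branch $f$.

```python
def residual_block(x, f):
    """Return y = f(x) + x for a vector x, where f is the residual branch."""
    fx = f(x)
    if len(fx) != len(x):
        raise ValueError("the residual branch must preserve the shape of x")
    return [a + b for a, b in zip(fx, x)]


def zero_branch(x):
    """A branch whose weights have collapsed to zero: f(x) = 0."""
    return [0.0] * len(x)


def scale_branch(x):
    """Hypothetical stand-in for a learned branch: f(x) = 0.1 * x."""
    return [0.1 * v for v in x]


x = [1.0, -2.0, 3.0]
print(residual_block(x, zero_branch))   # [1.0, -2.0, 3.0], the block is the identity
print(residual_block(x, scale_branch))  # a small correction on top of x
```

When the branch outputs zero, the block passes its input through unchanged, which is the reason an extra residual block should not hurt in principle.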

Xception

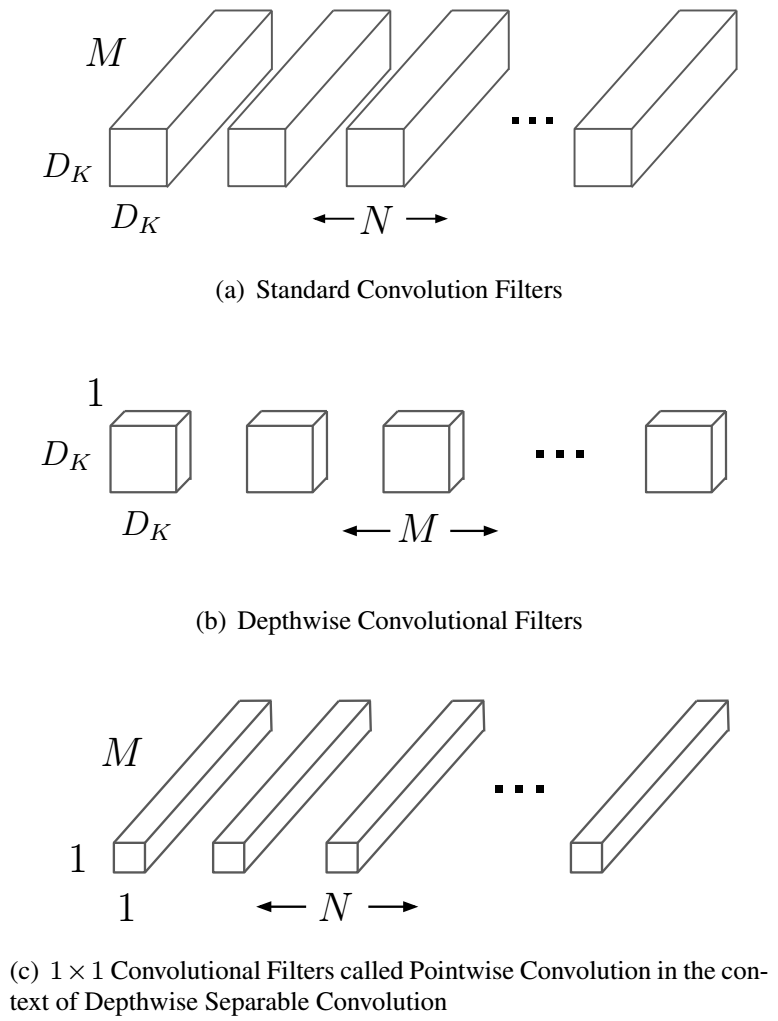

Xception (2017) mainly focuses on the efficiency of convolutional neural networks by using depthwise separable convolutions. Namely, a convolution with a kxk kernel and n output channels is divided into two stages:

- depthwise convolution: a convolution with a kxk kernel is performed on each channel independently. This stage mainly extracts spatial information.

- pointwise convolution: a convolution with a 1x1 kernel is performed across all channels together. This stage mainly fuses information across channels.

The computational cost is $\frac{1}{k\times k} + \frac{1}{n}$ of that of the original convolution.
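The ratio can be verified by counting multiply-accumulate operations for both variants. The layer sizes below are hypothetical, and the sketch assumes stride 1 with the output resolution equal to the input resolution.

```python
def standard_conv_macs(k, c_in, c_out, h, w):
    """Multiply-accumulates of a standard k x k convolution on an h x w map."""
    return k * k * c_in * c_out * h * w


def separable_conv_macs(k, c_in, c_out, h, w):
    """Multiply-accumulates of a depthwise k x k plus a pointwise 1x1 convolution."""
    depthwise = k * k * c_in * h * w      # one k x k filter per input channel
    pointwise = c_in * c_out * h * w      # 1x1 convolution mixing all channels
    return depthwise + pointwise


# Hypothetical layer: 3x3 kernel, 128 input channels, n = 256 output channels.
k, c_in, n, h, w = 3, 128, 256, 56, 56

ratio = separable_conv_macs(k, c_in, n, h, w) / standard_conv_macs(k, c_in, n, h, w)
predicted = 1 / (k * k) + 1 / n

print(f"measured ratio:  {ratio:.4f}")      # 0.1150
print(f"1/k^2 + 1/n:     {predicted:.4f}")  # 0.1150
```

For a 3x3 kernel the $\frac{1}{k\times k}$ term dominates, so the separable version costs roughly one ninth of the standard convolution.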

MobileNet

MobileNet (2017) applies the same idea, depthwise separable convolutions, to design a network efficient enough to run on a mobile device.

Inception-ResNet

Inception-ResNet (2017) applies the idea of residual blocks to the Inception network. It consists of two kinds of blocks:

- inception module

- reduction block

For the differences between all versions of the Inception network, see the article "A Simple Guide to the Versions of the Inception Network".

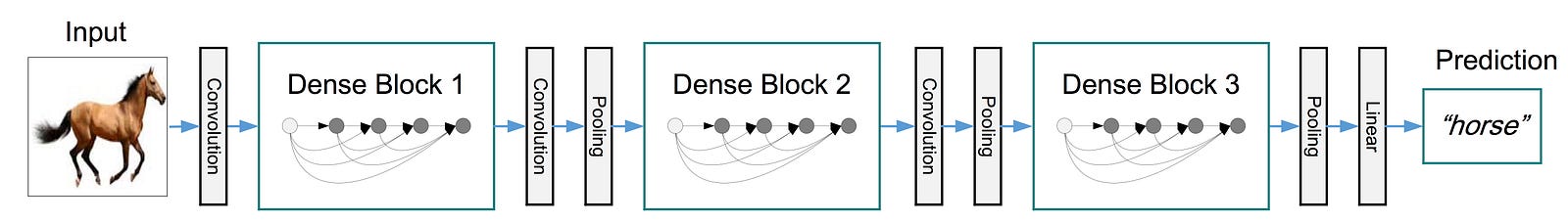

DenseNet

DenseNet (2017) addresses the vanishing-gradient problem differently from ResNet: ResNet uses summation to combine the output of the previous layer with the output of the current layer as the input of the next layer, whereas DenseNet uses concatenation to combine the outputs of different layers. In a dense block, the input of each layer is the concatenation of the outputs of ALL previous layers.

It alleviates the vanishing-gradient problem, strengthens feature propagation, encourages feature reuse, and substantially reduces the number of parameters. The reason for the smaller parameter count is that DenseNet can use narrower layers (fewer output channels per layer).

Since the number of input channels grows linearly with the layer index within a dense block (so the total number of connections grows quadratically), a bottleneck layer is introduced to reduce the number of feature channels. It is essentially a convolution layer with a $1\times 1$ kernel and fewer output channels than input channels.
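The channel growth inside a dense block can be made concrete with a small calculation. In this sketch, `k0` (channels entering the block) and `growth_rate` (channels each layer adds) are illustrative values, and the bottleneck width of four times the growth rate is an assumption taken from the DenseNet paper rather than from this note.

```python
def dense_block_input_channels(k0, growth_rate, num_layers):
    """Input channels seen by each layer: k0 plus the outputs of all earlier layers."""
    return [k0 + growth_rate * i for i in range(num_layers)]


def bottleneck_width(growth_rate, factor=4):
    """Channels after the 1x1 bottleneck (factor of 4 assumed from the DenseNet paper)."""
    return factor * growth_rate


k0, growth_rate, num_layers = 64, 32, 6   # illustrative values

inputs = dense_block_input_channels(k0, growth_rate, num_layers)
print(inputs)                                   # [64, 96, 128, 160, 192, 224]
print(k0 + growth_rate * num_layers)            # 256 channels leave the block
print(bottleneck_width(growth_rate))            # 128 channels feed each 3x3 convolution
```

With the bottleneck in place, every 3x3 convolution sees a fixed number of input channels no matter how deep it sits in the block.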

NASNet

NASNet (2017) refers to the network architecture learned via neural architecture search. The NASNet architecture is composed of two types of layers: the Normal Layer and the Reduction Layer. Both are designed by AutoML.

MobileNet V2

MobileNet V2 (2018) combines ideas from MobileNet V1 and ResNet: in addition to using depthwise separable convolutions as efficient building blocks, it uses linear bottlenecks between the layers (to reduce the number of feature channels) and shortcut connections between the bottlenecks.
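The channel flow through one such block can be sketched as follows. The function name is hypothetical, and the expansion factor of 6, the ReLU6 activations, and the shortcut condition (stride 1 with matching channel counts) are assumptions taken from the MobileNet V2 paper rather than from this note.

```python
def inverted_residual_plan(c_in, c_out, stride=1, expansion=6):
    """Describe one MobileNet V2-style block as (layer, in_channels, out_channels)."""
    hidden = c_in * expansion
    layers = [
        ("1x1 conv + ReLU6 (expand)", c_in, hidden),
        ("3x3 depthwise conv + ReLU6", hidden, hidden),
        ("1x1 conv, no activation (linear bottleneck)", hidden, c_out),
    ]
    # The shortcut joins the two narrow bottleneck ends, so shapes must match.
    use_shortcut = stride == 1 and c_in == c_out
    return layers, use_shortcut


layers, use_shortcut = inverted_residual_plan(24, 24)
for name, cin, cout in layers:
    print(f"{name}: {cin} -> {cout}")
print("shortcut:", use_shortcut)
```

The wide layers sit inside the block while the shortcut connects the narrow bottlenecks, which is the opposite of the original ResNet bottleneck layout.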