Deep Learning Hardware for Embedded

If you want to run deep learning inference on an embedded system, there are now several possibilities, e.g., a Raspberry Pi.

Raspberry Pi

I would suggest going for a Raspberry Pi 3 Model B+ for deep learning inference tasks.

- Manufacturer: Raspberry Pi Foundation

- Framework: TensorFlow, Caffe

- Support format: FP32

- Price: $35 on Adafruit

- Spec:

- Broadcom BCM2837B0, Cortex-A53 (ARMv8) 64-bit SoC @ 1.4GHz

- 1GB LPDDR2 SDRAM

- 2.4GHz and 5GHz IEEE 802.11b/g/n/ac wireless LAN, Bluetooth 4.2, BLE

- Gigabit Ethernet over USB 2.0 (maximum throughput 300 Mbps)

- Extended 40-pin GPIO header

- Full-size HDMI

- 4 USB 2.0 ports

- CSI camera port

- DSI display

- 4-pole stereo output and composite video port

- Micro SD port
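With only 1GB of RAM on the board, it helps to estimate how much memory a model's weights take in each numeric format listed in this post. The following is a rough sketch, not a measurement: the function name `weight_memory_mib` and the parameter count are made up for illustration, and activations and framework overhead are ignored.

```python
# Bytes needed to store one weight in each numeric format mentioned in this post.
BYTES_PER_WEIGHT = {"FP32": 4, "FP16": 2, "INT8": 1}


def weight_memory_mib(num_params: int, fmt: str) -> float:
    """Return the memory taken by the weights alone, in MiB."""
    return num_params * BYTES_PER_WEIGHT[fmt] / (1024 ** 2)


# Hypothetical model size chosen for illustration, not a measured value.
num_params = 25_000_000
for fmt in BYTES_PER_WEIGHT:
    print(f"{fmt}: {weight_memory_mib(num_params, fmt):.1f} MiB")
```

The same network needs a quarter of the weight storage in INT8 compared with FP32, which is one reason the accelerators below favor reduced-precision formats.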

Edge-TPU

- Manufacturer: Google

- Framework: TensorFlow

- Support format: Int8, Int16

- Price: $149 for the developer kit

- Spec:

- NXP i.MX 8M SoC (Quad-core Cortex-A53, plus Cortex-M4F)

- GC7000 Lite Graphics

- Google Edge TPU ML accelerator coprocessor

- Wi-Fi 2x2 MIMO (802.11b/g/n/ac 2.4/5GHz) and Bluetooth 4.1

- 8GB eMMC

- 1GB LPDDR4

- USB Type-C power port (5V DC), USB 3.0 Type-C OTG port, USB 3.0 Type-A host port, USB 2.0 Micro-B serial console port

- HDMI 2.0a (full size), 39-pin FFC connector for MIPI DSI display (4-lane), 24-pin FFC connector for MIPI CSI-2 camera (4-lane)

- MicroSD card slot

- Gigabit Ethernet port

- 40-pin GPIO expansion header (Raspberry Pi Compatible)

- Supports Mendel Linux (derivative of Debian)
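Since the Edge TPU runs integer models, FP32 values have to be mapped to 8-bit integers before deployment. Below is a minimal sketch of generic affine quantization (real value = scale * (q - zero_point)), assuming a signed int8 range. The helper names are hypothetical; in practice the TensorFlow Lite converter does this work, and its exact procedure may differ.

```python
from typing import List, Tuple


def quantize_params(x_min: float, x_max: float) -> Tuple[float, int]:
    """Derive a scale and zero point mapping [x_min, x_max] onto int8 [-128, 127]."""
    # Widen the range to include 0.0 so that zero is exactly representable.
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)
    scale = (x_max - x_min) / 255.0
    if scale == 0.0:
        scale = 1.0  # degenerate range: every value is 0.0
    zero_point = int(round(-128 - x_min / scale))
    return scale, max(-128, min(127, zero_point))


def quantize(values: List[float], scale: float, zero_point: int) -> List[int]:
    """Map floats to int8, clamping anything outside the calibrated range."""
    return [max(-128, min(127, int(round(v / scale)) + zero_point)) for v in values]


def dequantize(q_values: List[int], scale: float, zero_point: int) -> List[float]:
    """Recover approximate floats from int8 values."""
    return [(q - zero_point) * scale for q in q_values]


scale, zero_point = quantize_params(-1.0, 3.0)
original = [-1.0, 0.0, 0.5, 3.0]
restored = dequantize(quantize(original, scale, zero_point), scale, zero_point)
for a, b in zip(original, restored):
    print(f"{a:+.4f} -> {b:+.4f} (error {abs(a - b):.4f})")
```

For values inside the calibrated range, the round-trip error stays within half of `scale`, which is the precision given up in exchange for 8-bit storage and integer arithmetic.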

Intel Neural Compute Stick

- Manufacturer: Intel

- Framework: Caffe, TensorFlow, MXNet, ONNX

- Support Format: FP16, Int8

- Driver: Ubuntu 16.04.3 LTS (64-bit), CentOS 7.4 (64-bit), and Windows 10 (64-bit)

- Price: $99 sold here

- Spec:

- Myriad X VPU with 16 SHAVE cores

- USB 3.0 Type-A

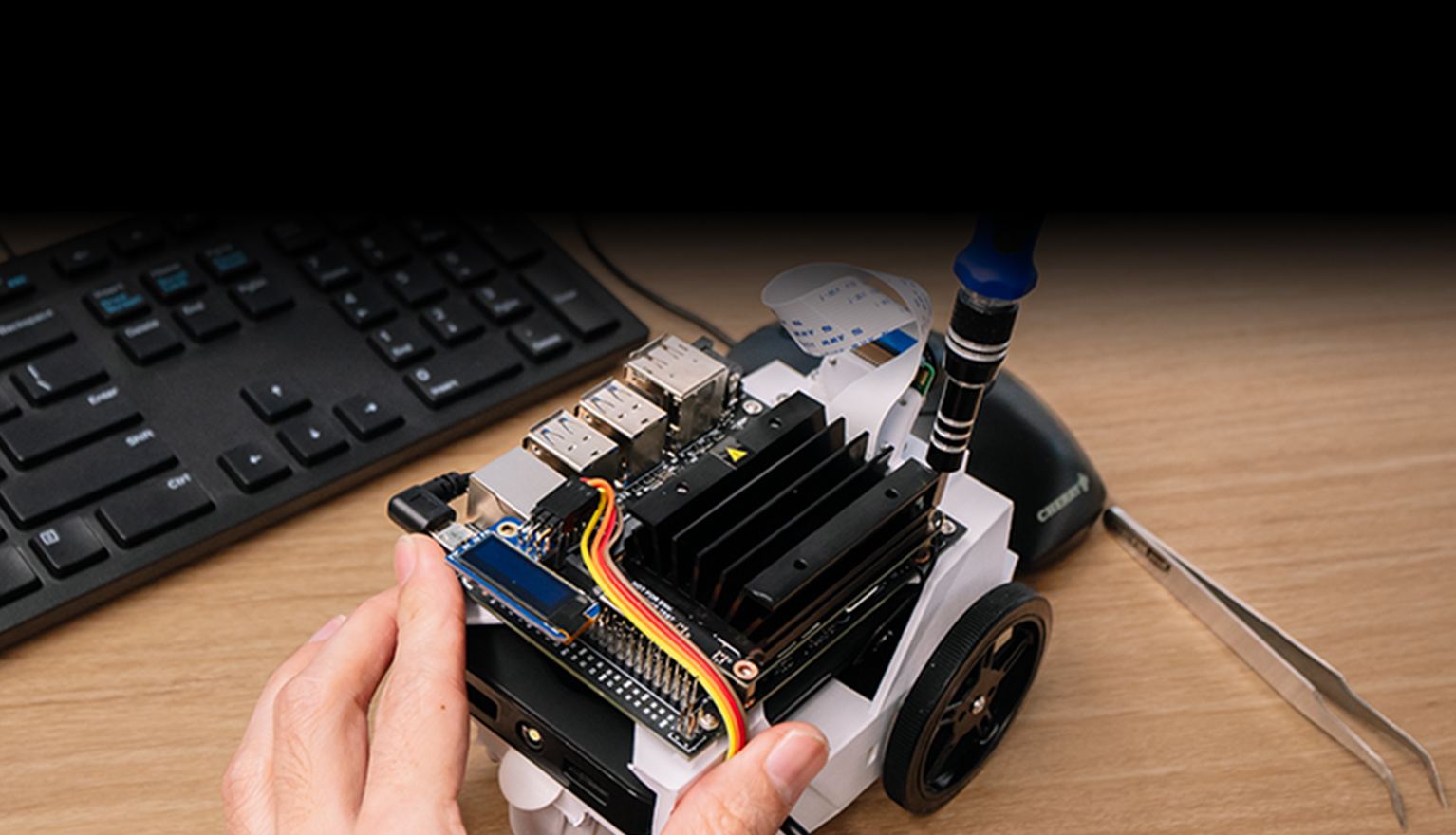

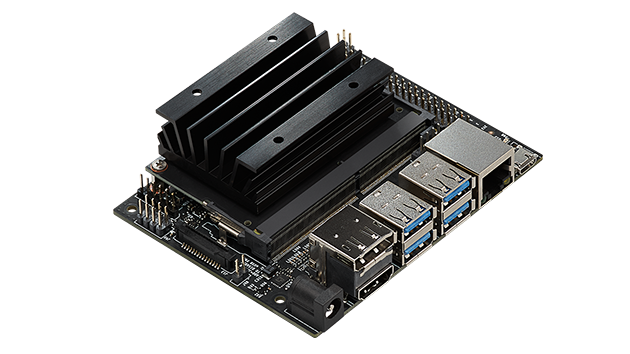
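Running a model in FP16 means every weight is rounded to half precision. As a small illustration of what that costs, the sketch below round-trips a few values through IEEE 754 half precision using Python's standard `struct` module (format code `e`). The helper name `to_fp16_and_back` is made up here, and this says nothing about how any vendor toolkit performs the conversion.

```python
import struct


def to_fp16_and_back(value: float) -> float:
    """Round a Python float to IEEE 754 half precision and return it as a float."""
    # struct raises OverflowError for magnitudes beyond the FP16 range (about 65504).
    return struct.unpack("<e", struct.pack("<e", value))[0]


for v in (1.0, 0.1, 3.14159265, 1000.123):
    r = to_fp16_and_back(v)
    print(f"{v!r} -> {r!r} (absolute error {abs(v - r):.6g})")
```

Values such as 1.0 survive unchanged, while most others pick up a small rounding error that grows with magnitude.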

Nvidia Jetson Nano

- Manufacturer: Nvidia

- Framework: Caffe, TensorFlow, MXNet, ONNX

- Support Format: FP32, FP16

- Driver: Ubuntu 18.04-based Linux for Tegra (L4T), installed through NVIDIA JetPack

- Price: $99 sold here

- Spec:

- NVIDIA Maxwell™ architecture with 128 NVIDIA CUDA® cores

- Quad-core ARM® Cortex®-A57 MPCore processor

- 4 GB 64-bit LPDDR4

- 16 GB eMMC 5.1 Flash

- Video encode 4K @ 30 (H.264/H.265) and video decode 4K @ 60 (H.264/H.265)

- 12 lanes (3x4 or 4x2) MIPI CSI-2 DPHY 1.1 (1.5 Gbps)

- USB Type-A (1x USB 3.0, 3x USB 2.0), USB 2.0 Micro-B

- 1x MIPI CSI-2 DPHY lanes

- Gigabit Ethernet, M.2 Key E (1 x1/2/4 PCIE, for WiFi, Bluetooth or SSD)

- microSD

- HDMI 2.0 or DP 1.2, eDP 1.4, DSI (1 x2), 2 simultaneous displays

- 40-pin GPIO expansion header (1x SDIO / 2x SPI / 6x I2C / 2x I2S / GPIOs)

Ultra96

Ultra96 is a board based on the Xilinx Zynq UltraScale+ MPSoC, which has both ARM cores and FPGA fabric.

- Manufacturer: Avnet (board), Xilinx (SoC)

- Framework: PYNQ (Python Productivity for Zynq), a framework developed by Xilinx that can be used for machine learning on Zynq FPGA boards. Examples: FINN, QNN, BNN

- Support Format: FP16, Int8

- Driver: runs Linux on the on-board ARM cores, e.g., the PYNQ image or PetaLinux

- Price: $249, sold by Avnet

- Spec:

- Xilinx Zynq UltraScale+ MPSoC ZU3EG A484

- Quad-core ARM® Cortex™-A53 MPCore™ up to 1.5GHz

- Dual-core ARM Cortex-R5 MPCore™ up to 600MHz

- 154K logic cells, 141K flip-flops, 71K LUTs, 360 DSP slices

- Micron 2 GB (512M x32) LPDDR4 Memory

- Delkin 16 GB microSD card + adapter

- Microchip Wi-Fi / Bluetooth

- Mini DisplayPort (MiniDP or mDP)

- 1x USB 3.0 Type Micro-B upstream port

- 2x USB 3.0, 1x USB 2.0 Type A downstream ports

- 40-pin 96Boards Low-speed expansion header

- 60-pin 96Boards High-speed expansion header

Performance Comparison

According to an experiment by Sam Sterckval, the Edge TPU has much better inference performance than all the others, including the Jetson Nano, and is comparable to an Nvidia GTX 1080.
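If you want to run a comparison like this on your own boards, a simple timing harness is enough to get started. The sketch below is not the method used in the experiment above; `benchmark` and `fake_inference` are hypothetical names, and the placeholder workload should be replaced with a real call into whichever framework runs on the device.

```python
import statistics
import time
from typing import Callable, Dict


def benchmark(run_inference: Callable[[], object],
              warmup: int = 5, runs: int = 50) -> Dict[str, float]:
    """Time repeated calls and report latency in milliseconds plus throughput."""
    # Warm-up calls are not timed, so one-off setup costs do not skew the result.
    for _ in range(warmup):
        run_inference()

    latencies_ms = []
    for _ in range(runs):
        start = time.perf_counter()
        run_inference()
        latencies_ms.append((time.perf_counter() - start) * 1000.0)

    mean_ms = statistics.mean(latencies_ms)
    return {
        "mean_ms": mean_ms,
        "median_ms": statistics.median(latencies_ms),
        "fps": 1000.0 / mean_ms if mean_ms > 0 else float("inf"),
    }


def fake_inference() -> int:
    # Placeholder workload standing in for a real model call (assumption).
    return sum(i * i for i in range(10_000))


print(benchmark(fake_inference, warmup=2, runs=10))
```

Use the same model, input size, and batch size on every device, and report the median alongside the mean, since occasional slow runs on small boards can distort the average.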

Written on April 13, 2019