Letter perception emerges from unsupervised deep learning and recycling of natural image features

A recent paper in Nature describes unsupervised deep learning for recognizing letter images.

Abstract

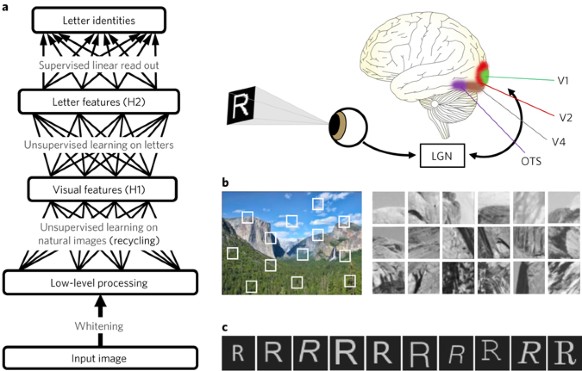

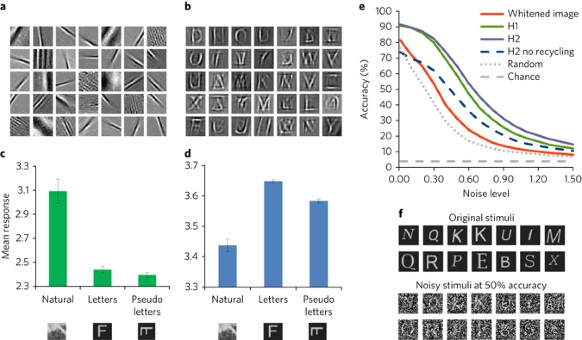

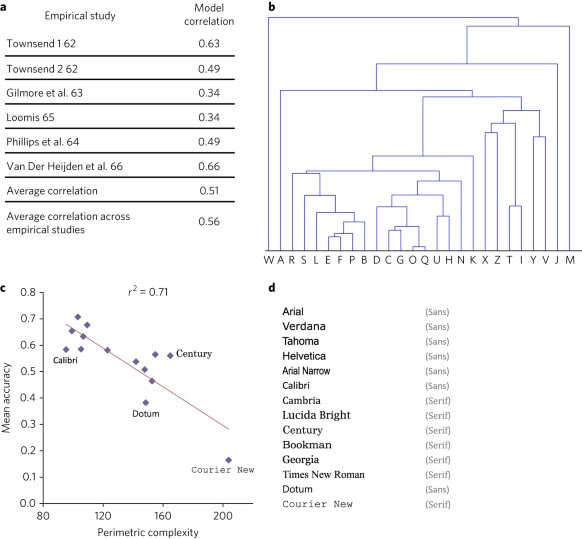

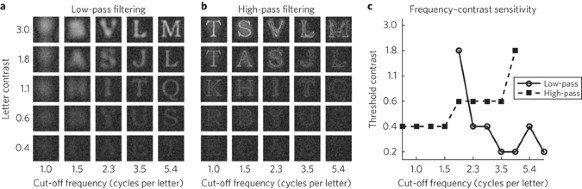

The use of written symbols is a major achievement of human cultural evolution. However, how abstract letter representations might be learned from vision is still an unsolved problem [1,2]. Here, we present a large-scale computational model of letter recognition based on deep neural networks [3,4], which develops a hierarchy of increasingly more complex internal representations in a completely unsupervised way by fitting a probabilistic, generative model to the visual input [5,6]. In line with the hypothesis that learning written symbols partially recycles pre-existing neuronal circuits for object recognition [7], earlier processing levels in the model exploit domain-general visual features learned from natural images, while domain-specific features emerge in upstream neurons following exposure to printed letters. We show that these high-level representations can be easily mapped to letter identities even for noise-degraded images, producing accurate simulations of a broad range of empirical findings on letter perception in human observers. Our model shows that by reusing natural visual primitives, learning written symbols only requires limited, domain-specific tuning, supporting the hypothesis that their shape has been culturally selected to match the statistical structure of natural environments [8].
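To make the two-stage idea in the abstract concrete (features learned without labels, then a simple mapping to letter identities), here is a minimal sketch. It is not the paper's implementation. The abstract does not name the model class here, so the restricted Boltzmann machine trained with one-step contrastive divergence is an assumption, as are the least-squares linear read-out, the random 16-pixel toy "letters", and every name in the code (`TinyRBM`, `make_toy_letters`, `run_demo`, and so on).

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class TinyRBM:
    """Hypothetical single-layer RBM; stands in for one level of the hierarchy."""

    def __init__(self, n_visible, n_hidden, rng):
        self.rng = rng
        self.W = 0.01 * rng.standard_normal((n_visible, n_hidden))
        self.b_v = np.zeros(n_visible)
        self.b_h = np.zeros(n_hidden)

    def hidden_probs(self, v):
        return sigmoid(v @ self.W + self.b_h)

    def visible_probs(self, h):
        return sigmoid(h @ self.W.T + self.b_v)

    def cd1_step(self, v0, lr=0.1):
        """One contrastive-divergence (CD-1) update; no labels are used."""
        h0 = self.hidden_probs(v0)
        h0_sample = (self.rng.random(h0.shape) < h0).astype(float)
        v1 = self.visible_probs(h0_sample)   # reconstruction of the input
        h1 = self.hidden_probs(v1)
        n = v0.shape[0]
        self.W += lr * (v0.T @ h0 - v1.T @ h1) / n
        self.b_v += lr * (v0 - v1).mean(axis=0)
        self.b_h += lr * (h0 - h1).mean(axis=0)
        return float(np.mean((v0 - v1) ** 2))  # mean squared reconstruction error


def make_toy_letters(rng, n_classes=4, n_pixels=16, n_per_class=50, flip_prob=0.05):
    """Random binary prototypes plus pixel-flip noise (toy data, not real letters)."""
    prototypes = (rng.random((n_classes, n_pixels)) < 0.5).astype(float)
    labels = np.repeat(np.arange(n_classes), n_per_class)
    images = prototypes[labels]
    flips = rng.random(images.shape) < flip_prob
    return np.where(flips, 1.0 - images, images), labels


def fit_linear_readout(features, labels, n_classes):
    """Least-squares map from hidden activations (plus bias) to one-hot labels."""
    X = np.hstack([features, np.ones((features.shape[0], 1))])
    Y = np.eye(n_classes)[labels]
    weights, *_ = np.linalg.lstsq(X, Y, rcond=None)
    return weights


def predict(features, weights):
    X = np.hstack([features, np.ones((features.shape[0], 1))])
    return np.argmax(X @ weights, axis=1)


def run_demo(seed=0, n_hidden=8, epochs=200):
    rng = np.random.default_rng(seed)
    images, labels = make_toy_letters(rng)
    rbm = TinyRBM(images.shape[1], n_hidden, rng)
    for _ in range(epochs):          # stage 1: unsupervised feature learning
        rbm.cd1_step(images)
    features = rbm.hidden_probs(images)
    weights = fit_linear_readout(features, labels, n_classes=4)  # stage 2: read-out
    accuracy = float(np.mean(predict(features, weights) == labels))
    return features, weights, accuracy


if __name__ == "__main__":
    _, _, acc = run_demo()
    print(f"training-set read-out accuracy: {acc:.2f}")
```

The labels enter only in `fit_linear_readout`, so the learned features are independent of the classification task. A model closer to the one the abstract describes would stack several such layers and train the early ones on natural image patches before exposing the later ones to letters.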

Method