NeRF in the Wild

This note discusses NeRF in the Wild: Neural Radiance Fields for Unconstrained Photo Collections (NeRF-W). The central limitation of NeRF that NeRF-W addresses is its assumption that the world is geometrically, materially, and photometrically static, i.e. that the density and radiance of the world are constant. NeRF-W instead models per-image appearance variations (such as exposure, lighting, and weather) and models the scene as the union of shared and image-dependent elements, thereby enabling the unsupervised decomposition of scene content into “static” and “transient” components.
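To make the "union of shared and image-dependent elements" concrete, here is a minimal NumPy sketch of how a static and a transient field could be composited along one ray. It assumes the usual NeRF-style alpha compositing, with both densities attenuating the transmittance; the function name `composite_ray` and its argument layout are illustrative, not taken from the paper's code.

```python
import numpy as np

def composite_ray(sigma_s, rgb_s, sigma_t, rgb_t, deltas):
    """Composite static and transient samples along a single ray.

    sigma_s, sigma_t: (K,) static / transient densities at K samples
    rgb_s, rgb_t:     (K, 3) static / transient colors
    deltas:           (K,) distances between consecutive samples
    """
    # Per-sample opacity of each component.
    alpha_s = 1.0 - np.exp(-sigma_s * deltas)
    alpha_t = 1.0 - np.exp(-sigma_t * deltas)

    # Transmittance up to (but excluding) sample k; both components occlude.
    optical_depth = (sigma_s + sigma_t) * deltas
    trans = np.exp(-np.concatenate([[0.0], np.cumsum(optical_depth)[:-1]]))

    weights_s = trans * alpha_s
    weights_t = trans * alpha_t
    color = (weights_s[:, None] * rgb_s + weights_t[:, None] * rgb_t).sum(axis=0)
    return color, weights_s, weights_t
```

Setting `sigma_t` to zero reduces this to rendering only the static component, which is how a "static only" view can be obtained from such a decomposition.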

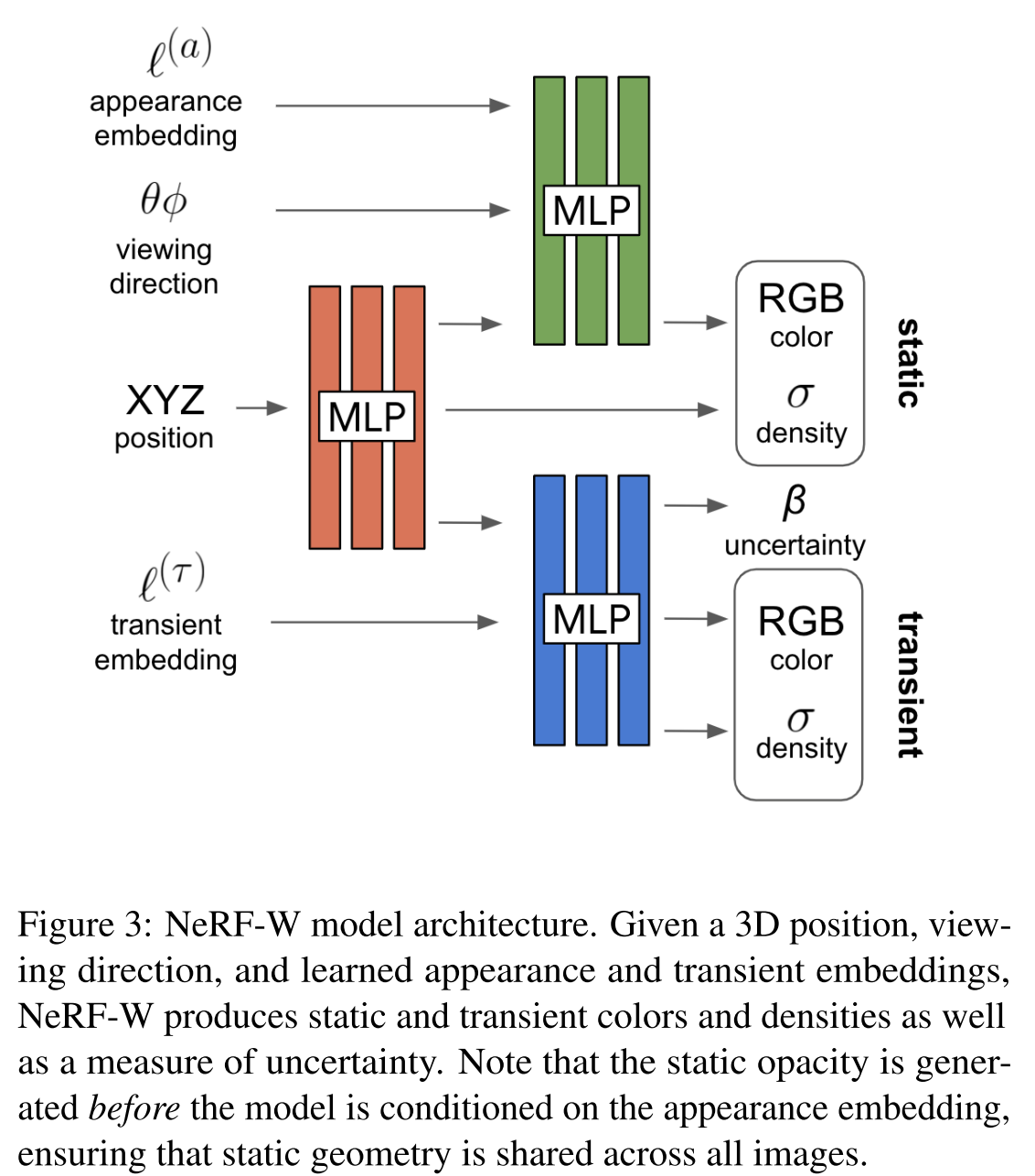

The figure shows the architecture of NeRF-W.

To remove the assumption that the scene is static, NeRF-W introduces two improvements:

- Per-image appearance variations: it uses a per-image appearance embedding (learned jointly with the model) and an appearance MLP (green) to model per-image appearance variation due to exposure, lighting, and weather.

- Transient components on top of the static scene (such as pedestrians): it uses a per-image transient embedding and a transient MLP (blue) to model the transient objects in each image. In addition, the transient MLP outputs an uncertainty value, which is used to down-weight (effectively mask) the transient objects during training.
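As a rough illustration of how a predicted uncertainty can mask transient content, the sketch below implements a Gaussian negative-log-likelihood-style loss in which each ray's squared color error is divided by its predicted variance. This is a simplified form: any additional regularizer on the transient density is omitted, and the name `uncertainty_weighted_loss` is hypothetical.

```python
import numpy as np

def uncertainty_weighted_loss(pred_rgb, true_rgb, beta):
    """Mean per-ray loss with a learned uncertainty.

    pred_rgb, true_rgb: (R, 3) rendered and ground-truth colors for R rays
    beta:               (R,) strictly positive per-ray uncertainty
    """
    sq_err = np.sum((pred_rgb - true_rgb) ** 2, axis=-1)
    # Large beta shrinks the error term; the log term penalizes
    # claiming high uncertainty everywhere.
    per_ray = sq_err / (2.0 * beta ** 2) + 0.5 * np.log(beta ** 2)
    return per_ray.mean()
```

Rays that hit a pedestrian tend to have large color error in every training image but one, so the model can lower the loss by predicting a large `beta` there, which in turn reduces the influence of those pixels on the static scene.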

Note that, unlike vanilla NeRF, this paper explicitly divides the static network into two parts: the red MLP captures the occupancy/density and the green MLP captures the appearance.

The embedding dimension is 48 for the appearance embedding and 16 for the transient embedding.
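The data flow described above can be sketched as a toy forward pass. Only the embedding sizes (48 and 16) come from this note; the number of images, the encoding sizes, the feature width, the single-layer heads, the activation choices, and all names (`forward`, `appearance_table`, and so on) are assumptions made to keep the example short, and the weights are random rather than trained.

```python
import numpy as np

rng = np.random.default_rng(0)

# Embedding sizes stated in the note.
D_APPEARANCE = 48
D_TRANSIENT = 16
# Hypothetical sizes, chosen only for illustration.
N_IMAGES, D_POS, D_DIR, D_FEAT = 10, 63, 27, 64

def dense(d_in, d_out):
    """Random weights and zero bias for one linear layer."""
    return rng.normal(0.0, 0.1, (d_in, d_out)), np.zeros(d_out)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def softplus(x):
    return np.logaddexp(0.0, x)

# One embedding vector per training image (learned in practice).
appearance_table = rng.normal(size=(N_IMAGES, D_APPEARANCE))
transient_table = rng.normal(size=(N_IMAGES, D_TRANSIENT))

W_feat, b_feat = dense(D_POS, D_FEAT)                        # density trunk
W_sigma, b_sigma = dense(D_FEAT, 1)                          # static density head
W_rgb, b_rgb = dense(D_FEAT + D_DIR + D_APPEARANCE, 3)       # appearance head
W_tr, b_tr = dense(D_FEAT + D_TRANSIENT, 5)                  # transient head

def forward(x_enc, d_enc, image_id):
    """x_enc: (N, D_POS) encoded positions, d_enc: (N, D_DIR) encoded directions."""
    n = x_enc.shape[0]
    # Density part: depends on position only, so geometry is shared by all images.
    z = np.maximum(x_enc @ W_feat + b_feat, 0.0)
    sigma = np.maximum(z @ W_sigma + b_sigma, 0.0)[:, 0]
    # Appearance part: color also sees the view direction and the image's embedding.
    a = np.broadcast_to(appearance_table[image_id], (n, D_APPEARANCE))
    rgb = sigmoid(np.concatenate([z, d_enc, a], axis=1) @ W_rgb + b_rgb)
    # Transient part: per-image color, density, and uncertainty.
    t = np.broadcast_to(transient_table[image_id], (n, D_TRANSIENT))
    out = np.concatenate([z, t], axis=1) @ W_tr + b_tr
    return {
        "sigma": sigma,
        "rgb": rgb,
        "rgb_t": sigmoid(out[:, :3]),
        "sigma_t": softplus(out[:, 3]),
        "beta": softplus(out[:, 4]),
    }
```

Calling `forward` on the same points with two different `image_id` values gives identical `sigma` but different `rgb`, which is the point of separating density from appearance: the appearance embedding can change color without altering the shared geometry.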

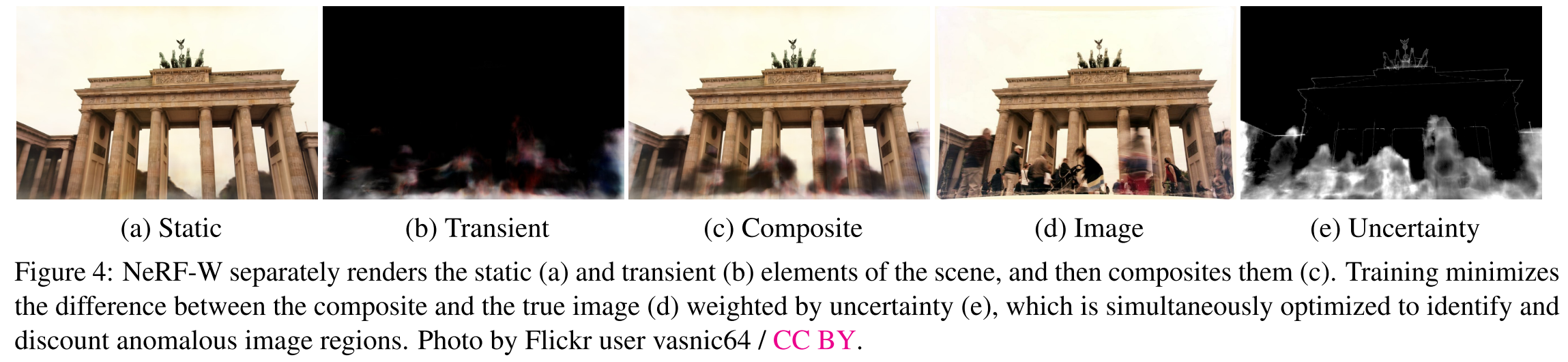

This figure shows the impact of each component.