Optical Flow

[klt flownet deep-learning optical-flow lucas-kanade]

Optical flow (or optic flow) is the pattern of apparent motion of objects, surfaces, and edges in a visual scene caused by the relative motion between an observer and the scene. It can also be defined as the distribution of apparent velocities of brightness patterns in an image.

In this post, we introduce several optical flow algorithms, from the oldest to the most recent.

Lucas-Kanade

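Lucas-Kanade is one of the most classical methods for computing optical flow. It assumes that the brightness of a point on an object does not change between two consecutive frames (brightness constancy). That is:

$$I(x, y, t) = I(x + \Delta x,\ y + \Delta y,\ t + \Delta t)$$

By a first-order Taylor expansion of the right-hand side, we have:

$$I_x \Delta x + I_y \Delta y + I_t \Delta t = 0$$

where $I_x$ and $I_y$ are the partial derivatives of the image in the x and y directions, and $I_t \Delta t$ is the difference in brightness between the two frames.

However, this single equation has two unknowns, $\Delta x$ and $\Delta y$, so it has infinitely many solutions. To resolve this, Lucas-Kanade further assumes that the optical flow is constant within a local region. Thus, for the pixels $p_1, \dots, p_n$ in that region, we have:

$$\begin{bmatrix} I_x(p_1) & I_y(p_1) \\ \vdots & \vdots \\ I_x(p_n) & I_y(p_n) \end{bmatrix} \begin{bmatrix} \Delta x \\ \Delta y \end{bmatrix} = -\begin{bmatrix} I_t(p_1) \Delta t \\ \vdots \\ I_t(p_n) \Delta t \end{bmatrix}$$

which is an over-determined system that is solved for $(\Delta x, \Delta y)$ in the least-squares sense.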
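The local-constancy assumption turns the problem into a small least-squares fit per window. The following is a minimal sketch of that step in plain Python, not a reference implementation: the function name `lucas_kanade_window`, the central-difference gradients, and the default window size are assumptions made for illustration.

```python
def lucas_kanade_window(prev, curr, cx, cy, half=2):
    """Estimate the flow (dx, dy) of the window centred at (cx, cy).

    prev, curr: grayscale frames as 2-D lists indexed [y][x].
    Hypothetical helper: assumes the window lies at least one pixel
    inside the image so that central differences are defined.
    Returns None when the 2x2 system is singular (aperture problem).
    """
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(cy - half, cy + half + 1):
        for x in range(cx - half, cx + half + 1):
            ix = (prev[y][x + 1] - prev[y][x - 1]) / 2.0  # I_x
            iy = (prev[y + 1][x] - prev[y - 1][x]) / 2.0  # I_y
            it = curr[y][x] - prev[y][x]                  # I_t * dt
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it

    # Normal equations: [[sxx, sxy], [sxy, syy]] @ [dx, dy] = [-sxt, -syt]
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-9:
        return None
    dx = (-syy * sxt + sxy * syt) / det
    dy = (sxy * sxt - sxx * syt) / det
    return dx, dy
```

The `None` return covers windows with no texture or with gradients in a single direction, where the local system has no unique solution.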

FlowNet

From the abstract of the FlowNet paper:

"Convolutional neural networks (CNNs) have recently been very successful in a variety of computer vision tasks, especially on those linked to recognition. Optical flow estimation has not been among the tasks where CNNs were successful. In this paper we construct appropriate CNNs which are capable of solving the optical flow estimation problem as a supervised learning task. We propose and compare two architectures: a generic architecture and another one including a layer that correlates feature vectors at different image locations. Since existing ground truth data sets are not sufficiently large to train a CNN, we generate a synthetic Flying Chairs dataset. We show that networks trained on this unrealistic data still generalize very well to existing datasets such as Sintel and KITTI, achieving competitive accuracy at frame rates of 5 to 10 fps."

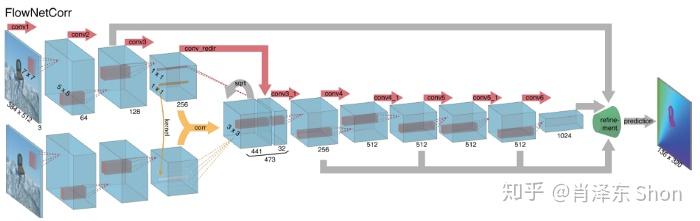

FlowNet is the first end-to-end neural network model for computing optical flow. To work around the shortage of ground-truth optical flow data, it is trained on synthetic data (the Flying Chairs dataset). Two models, both based on an encoder-decoder architecture, were proposed:

- FlowNetSimple: stack the two adjacent frames along the channel dimension and feed them to a single network;

- FlowNetCorr: extract features from the two adjacent frames in separate branches, then combine them with a correlation operation (similar to block matching) over a 21x21 neighborhood.
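To make the correlation operation more concrete, here is a rough pure-Python sketch of the idea: for every position in the first feature map, take the dot product of its feature vector with the feature vectors at displaced positions in the second map. The function name `correlation`, the nested-list layout `[H][W][C]`, and the zero padding at the borders are assumptions for this example. With `max_disp=20` and `stride=2`, each position gets 21x21 = 441 scores, which matches the neighborhood size mentioned above.

```python
def correlation(f1, f2, max_disp=1, stride=1):
    """Correlate two feature maps given as nested lists [H][W][C].

    For each position (y, x) in f1, compute the dot product between
    f1[y][x] and f2[y + dy][x + dx] for every displacement (dy, dx)
    in the search grid. Displacements that fall outside f2 score 0.0
    (zero padding, an assumption of this sketch).
    Returns a nested list [H][W][D * D], where D is the number of
    displacements per axis.
    """
    h, w = len(f1), len(f1[0])
    offsets = range(-max_disp, max_disp + 1, stride)
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            a = f1[y][x]
            scores = []
            for dy in offsets:
                for dx in offsets:
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        b = f2[yy][xx]
                        scores.append(sum(p * q for p, q in zip(a, b)))
                    else:
                        scores.append(0.0)
            row.append(scores)
        out.append(row)
    return out
```

A real network would run this on the GPU over learned feature maps; the loops here only show which values are compared.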

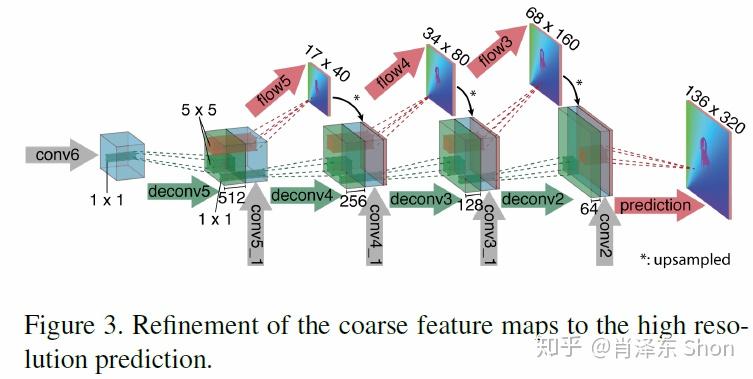

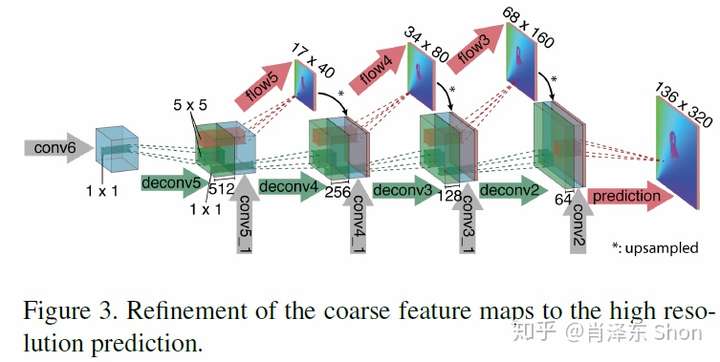

The refinement module is shown below:

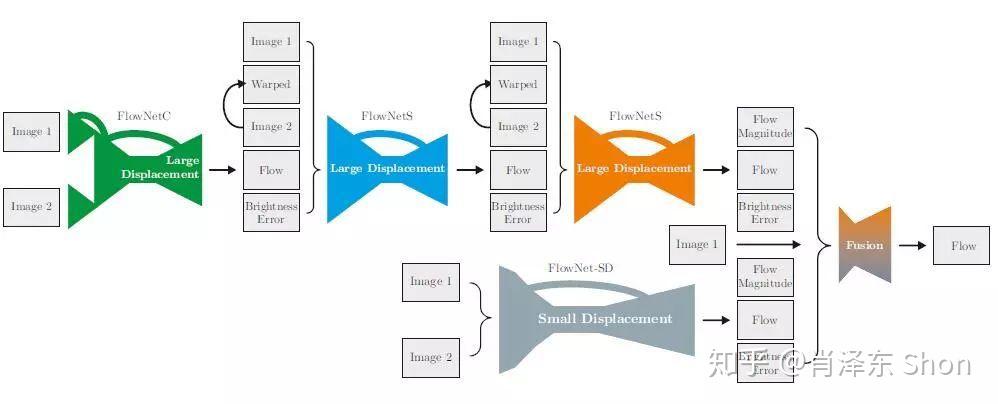

FlowNet2.0

From the abstract of the FlowNet 2.0 paper:

"The FlowNet demonstrated that optical flow estimation can be cast as a learning problem. However, the state of the art with regard to the quality of the flow has still been defined by traditional methods. Particularly on small displacements and real-world data, FlowNet cannot compete with variational methods. In this paper, we advance the concept of end-to-end learning of optical flow and make it work really well. The large improvements in quality and speed are caused by three major contributions: first, we focus on the training data and show that the schedule of presenting data during training is very important. Second, we develop a stacked architecture that includes warping of the second image with intermediate optical flow. Third, we elaborate on small displacements by introducing a sub-network specializing on small motions. FlowNet 2.0 is only marginally slower than the original FlowNet but decreases the estimation error by more than 50%. It performs on par with state-of-the-art methods, while running at interactive frame rates. Moreover, we present faster variants that allow optical flow computation at up to 140fps with accuracy matching the original FlowNet."

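Beyond a better training schedule, FlowNet2.0 improves on FlowNet in the following two architectural aspects:

- higher accuracy via a stacked, coarse-to-fine style architecture, in which each later network refines the flow after the second image has been warped with the intermediate estimate;

- lower error on small displacements, via a dedicated sub-network for small motions.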
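The warping step used between stacked networks can be sketched as follows: each pixel of the output samples the second image at the position the current flow estimate points to, using bilinear interpolation. This is only an illustrative sketch in plain Python. The function name `warp`, the nested-list image layout, and clamping samples to the image border are assumptions, not details taken from the paper.

```python
import math


def warp(img2, flow):
    """Backward-warp img2 towards the first frame using a flow field.

    img2: grayscale image as a 2-D list indexed [y][x].
    flow: 2-D list of (u, v) pairs, same size as img2.
    Output pixel (x, y) is img2 sampled bilinearly at (x + u, y + v).
    Sample positions outside the image are clamped to the border
    (an assumption of this sketch).
    """
    h, w = len(img2), len(img2[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            u, v = flow[y][x]
            sx = min(max(x + u, 0.0), w - 1.0)
            sy = min(max(y + v, 0.0), h - 1.0)
            x0, y0 = int(math.floor(sx)), int(math.floor(sy))
            x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
            ax, ay = sx - x0, sy - y0
            top = (1 - ax) * img2[y0][x0] + ax * img2[y0][x1]
            bottom = (1 - ax) * img2[y1][x0] + ax * img2[y1][x1]
            out[y][x] = (1 - ay) * top + ay * bottom
    return out
```

If the flow estimate were perfect, the warped second image would match the first image, so the next network in the stack only has to estimate the remaining, smaller displacement.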

NVIDIA Optical Flow SDK

The NVIDIA Optical Flow SDK exposes a set of APIs for the hardware optical flow engine on NVIDIA GPUs:

- C-API – Windows and Linux

  - Windows – CUDA and DirectX

  - Linux – CUDA

- Granularity: one flow vector per 4x4 pixel block, at ¼ pixel resolution

- Raw vectors – directly from hardware

- Pre-/post-processed vectors via algorithms to improve accuracy

- Accuracy: low average EPE (End-Point-Error)

- Robust to intensity changes
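For reference, the average end-point error (EPE) mentioned above is the mean Euclidean distance between predicted and ground-truth flow vectors. A minimal sketch in plain Python, with the function name `average_epe` and the nested-list flow layout chosen only for illustration:

```python
import math


def average_epe(pred, gt):
    """Mean Euclidean distance between two flow fields.

    pred, gt: 2-D lists of (u, v) pairs with identical shape.
    """
    total = 0.0
    count = 0
    for pred_row, gt_row in zip(pred, gt):
        for (pu, pv), (gu, gv) in zip(pred_row, gt_row):
            total += math.hypot(pu - gu, pv - gv)
            count += 1
    return total / count
```

This is independent of the SDK itself and applies equally to the outputs of Lucas-Kanade or FlowNet.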