Unsupervised Domain Adaptation

This is my reading note on CVPR 2021 Tutorial: Data- and Label-Efficient Learning in An Imperfect World. The original slides and videos are available online. Unsupervised domain adaptation methods can be divided into the following groups:

-

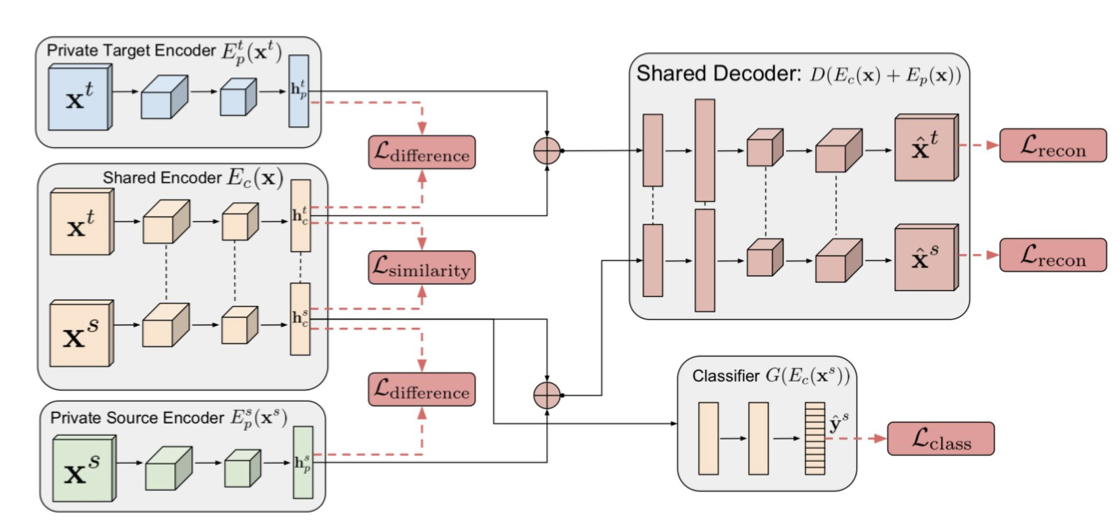

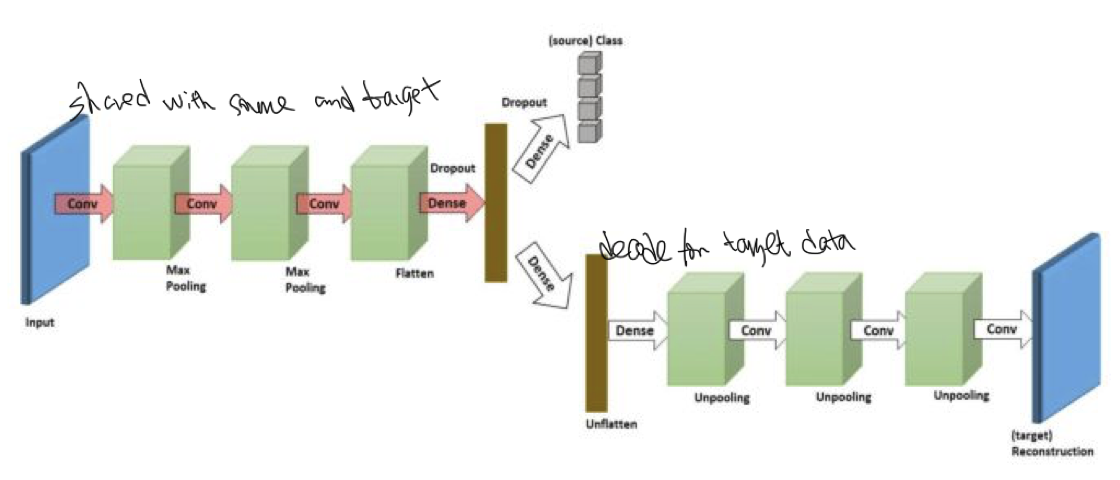

reconstruction based methods: uses auxiliary reconstruction task to ensure domain-invariant features.

- Domain Separation Networks (DSN)

- Deep Reconstruction-Classification Network (DRCN)
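
The reconstruction-based idea, in the spirit of DRCN, can be sketched as one objective: a shared encoder feeds both a classifier trained on labeled source data and a decoder that reconstructs unlabeled target inputs. This is a minimal NumPy sketch under stated assumptions: the one-layer linear/ReLU encoder, the names `joint_loss`, `W_enc`, `W_cls`, `W_dec`, and the weighting `lam` are hypothetical, and only the loss (no training loop) is shown.

```python
import numpy as np

def softmax(logits):
    # Numerically stable softmax over the last axis
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def joint_loss(xs, ys, xt, W_enc, W_cls, W_dec, lam=0.7):
    """Hypothetical DRCN-style objective: lam * classification + (1 - lam) * reconstruction."""
    # Shared encoder (assumed: one linear layer + ReLU) applied to both domains
    hs = np.maximum(xs @ W_enc, 0.0)
    ht = np.maximum(xt @ W_enc, 0.0)
    # Supervised branch: cross-entropy on labeled source samples
    probs = softmax(hs @ W_cls)
    ce = -np.mean(np.log(probs[np.arange(len(ys)), ys] + 1e-12))
    # Unsupervised branch: squared reconstruction error on unlabeled target samples
    rec = np.mean((ht @ W_dec - xt) ** 2)
    return lam * ce + (1.0 - lam) * rec, ce, rec

rng = np.random.default_rng(0)
xs, xt = rng.normal(size=(8, 5)), rng.normal(size=(6, 5))
ys = rng.integers(0, 3, size=8)
W_enc = rng.normal(size=(5, 4))
W_cls = rng.normal(size=(4, 3))
W_dec = rng.normal(size=(4, 5))
total, ce, rec = joint_loss(xs, ys, xt, W_enc, W_cls, W_dec)
print(f"total={total:.3f}  classification={ce:.3f}  reconstruction={rec:.3f}")
```

Because the encoder is shared, gradients from the target reconstruction term shape the same features the source classifier uses.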

Discrepancy-based methods: align the data representations of the two domains using statistical measures.

-

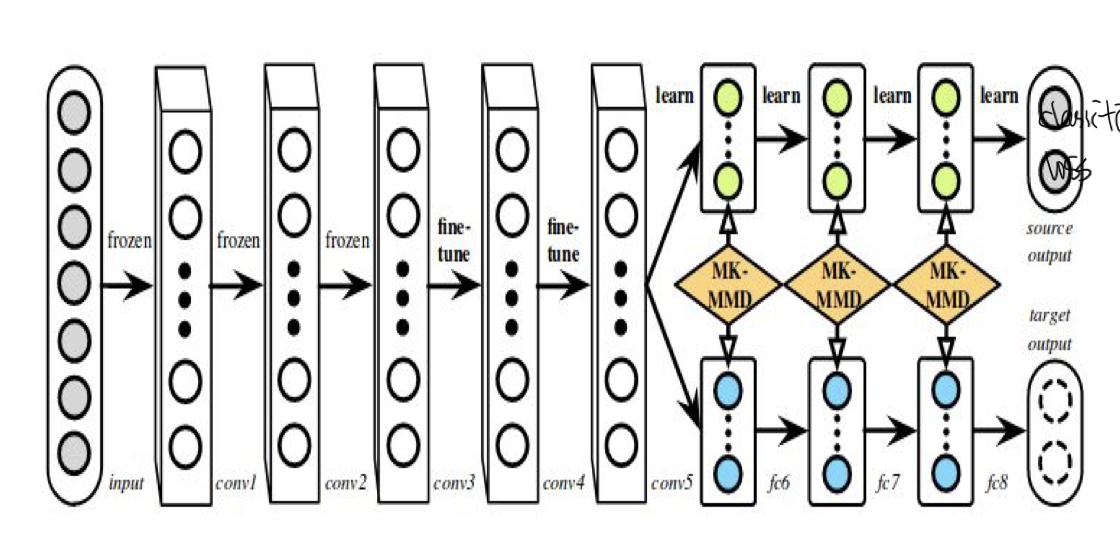

Deep Adaptation Networks: using MMD kernel for measures
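
The MMD criterion behind DAN compares two feature batches through kernel mean embeddings: the squared MMD is E[k(s,s')] + E[k(t,t')] - 2E[k(s,t)]. A minimal NumPy sketch of the biased estimate follows; it assumes an equal-weight sum of Gaussian kernels with hand-picked bandwidths, the function names are hypothetical, and DAN's actual estimator and kernel weighting differ.

```python
import numpy as np

def gaussian_kernel_sum(a, b, bandwidths):
    """Equal-weight sum of Gaussian kernels k(a_i, b_j) over several bandwidths."""
    d2 = ((a[:, None, :] - b[None, :, :]) ** 2).sum(axis=-1)   # pairwise squared distances
    return sum(np.exp(-d2 / (2.0 * s ** 2)) for s in bandwidths)

def mmd2(source, target, bandwidths=(1.0, 2.0, 4.0, 8.0)):
    """Biased (V-statistic) estimate of the squared MMD between two feature batches."""
    k_ss = gaussian_kernel_sum(source, source, bandwidths).mean()
    k_tt = gaussian_kernel_sum(target, target, bandwidths).mean()
    k_st = gaussian_kernel_sum(source, target, bandwidths).mean()
    return k_ss + k_tt - 2.0 * k_st

rng = np.random.default_rng(0)
src = rng.normal(0.0, 1.0, size=(200, 16))
tgt_near = rng.normal(0.0, 1.0, size=(200, 16))   # same distribution as the source
tgt_far = rng.normal(1.0, 1.0, size=(200, 16))    # mean shifted in every dimension
print(mmd2(src, tgt_near), mmd2(src, tgt_far))
```

In a network, this value would be computed on the activations of the adapted layers and added to the classification loss.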

-

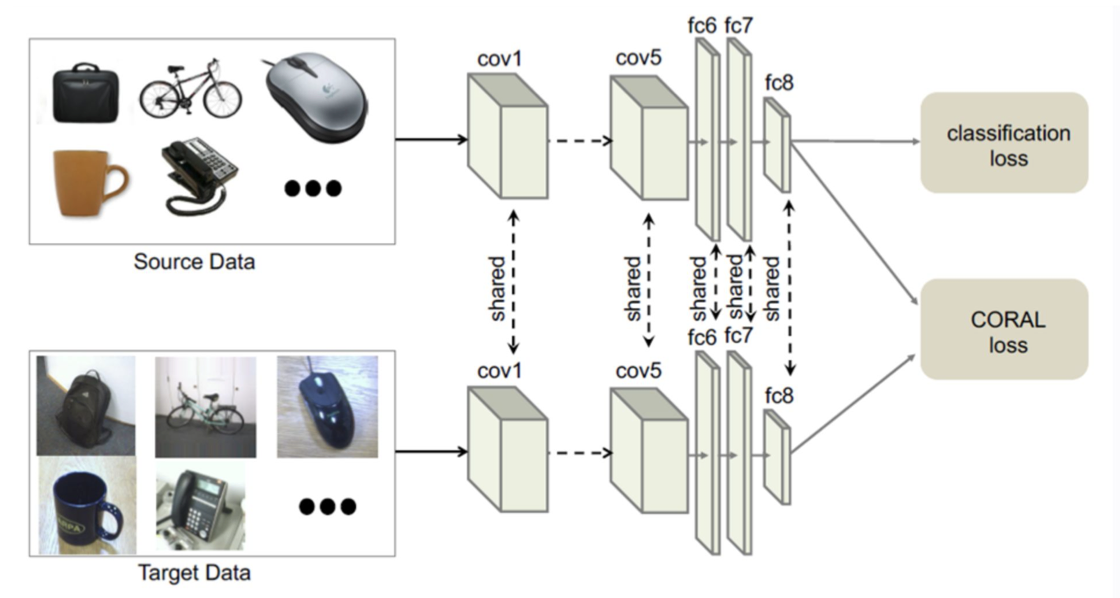

Deep CORAL: Correlation Alignment: using 2nd-order measurement
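
The CORAL loss can be written as the squared Frobenius distance between the source and target feature covariances, scaled by 1/(4d²), where d is the feature dimension. A minimal NumPy sketch follows (the function name and toy data are illustrative); it also shows that a pure mean shift is invisible to this second-order measure.

```python
import numpy as np

def coral_loss(source, target):
    """CORAL loss: squared Frobenius distance between feature covariances / (4 d^2)."""
    d = source.shape[1]
    cov_s = np.cov(source, rowvar=False)  # (d, d), mean-centred, 1/(n-1) normalization
    cov_t = np.cov(target, rowvar=False)
    return np.sum((cov_s - cov_t) ** 2) / (4.0 * d * d)

rng = np.random.default_rng(0)
src = rng.normal(size=(500, 8))
tgt_shifted = src + 5.0                         # same covariance, different mean
tgt_scaled = 3.0 * rng.normal(size=(500, 8))    # covariance roughly 9 * I
print(coral_loss(src, tgt_shifted))  # ~0: a mean shift does not change the covariance
print(coral_loss(src, tgt_scaled))   # close to (9 - 1)^2 * 8 / (4 * 64) = 2
```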

-

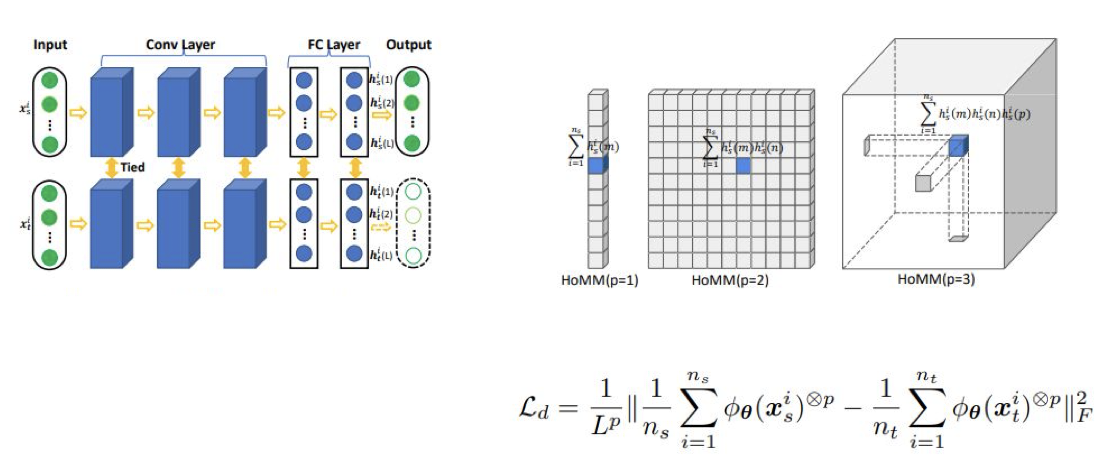

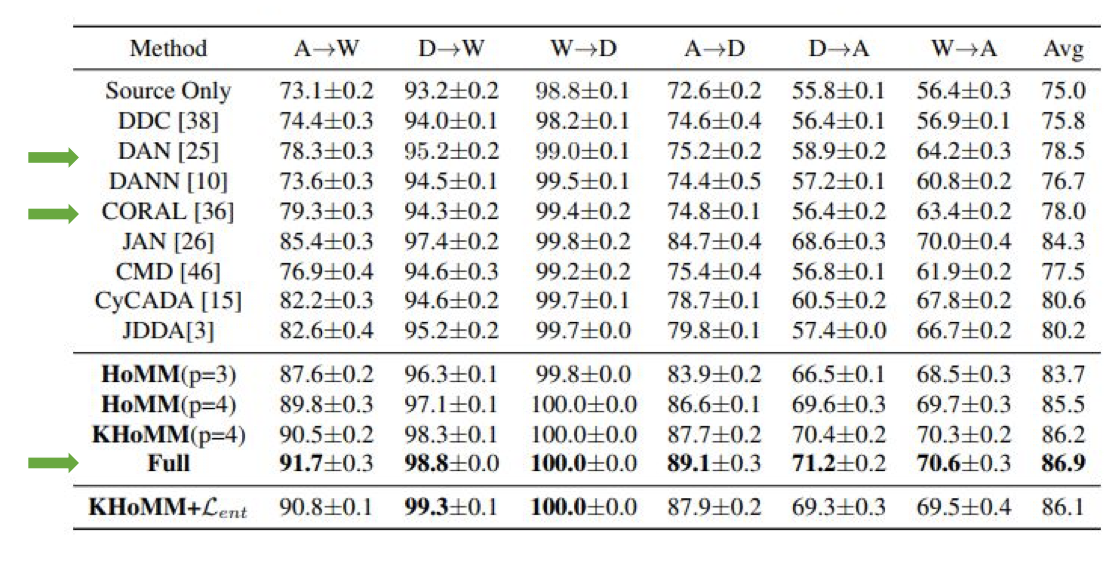

HoMM: Higher-order Moment Matching: using even higher order. It is found higher order gives better result.
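
Higher-order matching can be sketched by comparing batch means of order-p outer-product tensors. This minimal NumPy sketch uses uncentred moments and an assumed 1/d^p scaling, leaves out any approximations HoMM uses for large feature dimensions, and its names are hypothetical. The two target batches share the source's mean and variance but differ in skewness, which only the third-order term picks up.

```python
import numpy as np
from string import ascii_lowercase

def moment_tensor(h, order):
    """Batch mean of the order-p outer product h (x) h (x) ... (x) h, shape (d,) * order."""
    modes = ascii_lowercase[1:order + 1]                  # e.g. "bcd" for order 3
    spec = ",".join("a" + m for m in modes) + "->" + modes
    return np.einsum(spec, *([h] * order)) / h.shape[0]

def homm_loss(source, target, order=3):
    """Squared distance between order-p moment tensors, scaled by 1 / d^order (assumed)."""
    d = source.shape[1]
    diff = moment_tensor(source, order) - moment_tensor(target, order)
    return np.sum(diff ** 2) / d ** order

rng = np.random.default_rng(0)
src = rng.normal(size=(2000, 4))                     # symmetric: zero third moment
tgt_gauss = rng.normal(size=(2000, 4))
tgt_skew = rng.exponential(size=(2000, 4)) - 1.0     # mean 0, variance 1, but skewed
for name, tgt in [("gaussian", tgt_gauss), ("skewed", tgt_skew)]:
    print(name, homm_loss(src, tgt, order=2), homm_loss(src, tgt, order=3))
```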

-

-

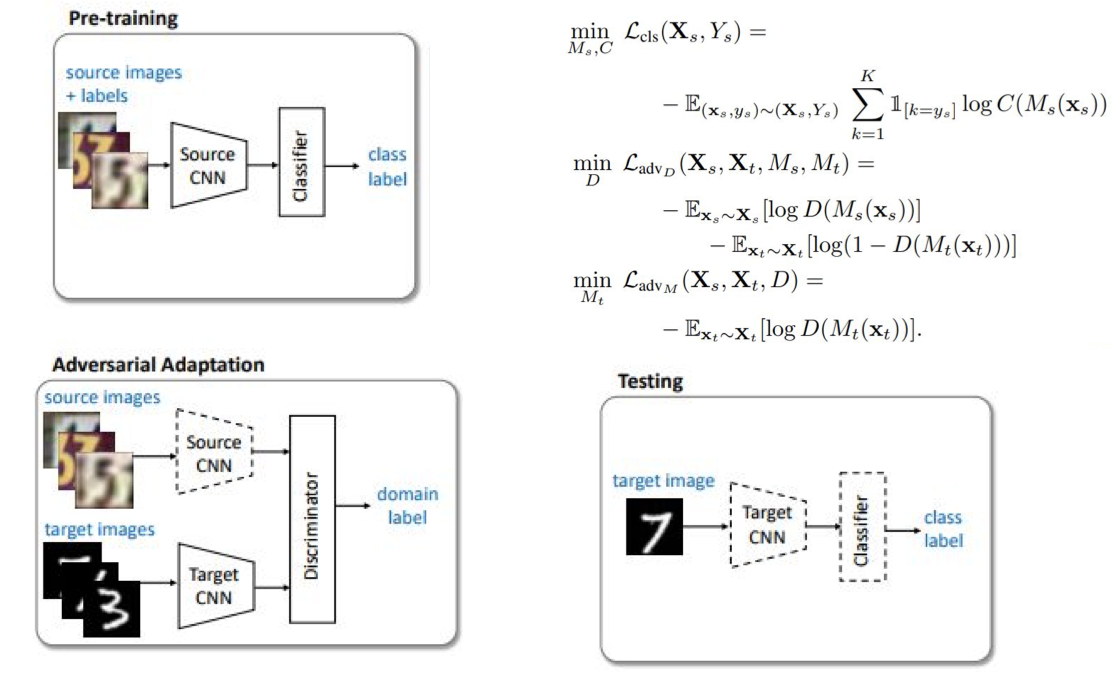

Adversarial-based Methods: involve a domain discriminator to enforce domain confusion while learning representations

Feature-based adversarial methods: do not try to reconstruct images across domains.

-

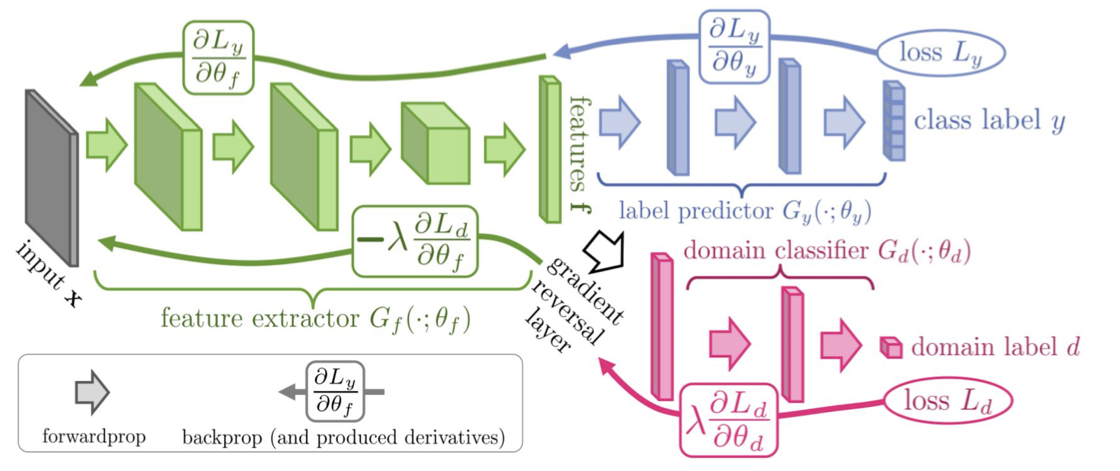

Domain Adversarial Network: feature extractor is shared cross domains and use domain classifier to estimate the domain.

- Adversarial Discriminative Domain Adaptation (ADDA): the feature extractor is not shared; the source and target domains each have their own.
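
The adversarial game in DANN (listed above) is commonly implemented with a gradient reversal layer: the domain classifier descends the domain loss, while the reversed gradient makes the feature extractor ascend it. The NumPy sketch below makes this explicit with a linear feature extractor `W_f`, a logistic domain classifier `w_d`, and hand-derived gradients; these choices, the names, and the step size are assumptions, and DANN's label-classifier loss is omitted.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def domain_loss(W_f, w_d, x, d):
    """Binary cross-entropy of a logistic domain classifier on features h = x @ W_f."""
    p = sigmoid(x @ W_f @ w_d)
    return -np.mean(d * np.log(p + 1e-12) + (1 - d) * np.log(1 - p + 1e-12))

def domain_grads(W_f, w_d, x, d):
    """Hand-derived gradients of domain_loss w.r.t. w_d and W_f."""
    h = x @ W_f
    err = (sigmoid(h @ w_d) - d) / len(d)     # dL/dlogit for each sample
    grad_w_d = h.T @ err                      # shape (k,)
    grad_W_f = x.T @ np.outer(err, w_d)       # shape (m, k)
    return grad_w_d, grad_W_f

def dann_domain_step(W_f, w_d, x, d, lr=0.1, lam=1.0):
    """Discriminator descends the domain loss; the gradient reversal layer multiplies the
    gradient reaching the feature extractor by -lam, so the extractor ascends it."""
    grad_w_d, grad_W_f = domain_grads(W_f, w_d, x, d)
    return W_f + lr * lam * grad_W_f, w_d - lr * grad_w_d

rng = np.random.default_rng(0)
x = np.vstack([rng.normal(0.0, 1.0, (50, 3)), rng.normal(1.5, 1.0, (50, 3))])
d = np.r_[np.zeros(50), np.ones(50)]          # domain labels: 0 = source, 1 = target
W_f, w_d = np.eye(3), rng.normal(size=3)
before = domain_loss(W_f, w_d, x, d)
W_f_new, w_d_new = dann_domain_step(W_f, w_d, x, d, lr=1e-3)
after = domain_loss(W_f_new, w_d, x, d)       # same (old) classifier, updated features
print(f"domain loss before: {before:.4f}, after reversed step: {after:.4f}")
```

With autodiff frameworks the same effect is obtained by a layer that is the identity in the forward pass and negates (and scales) the gradient in the backward pass.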

-

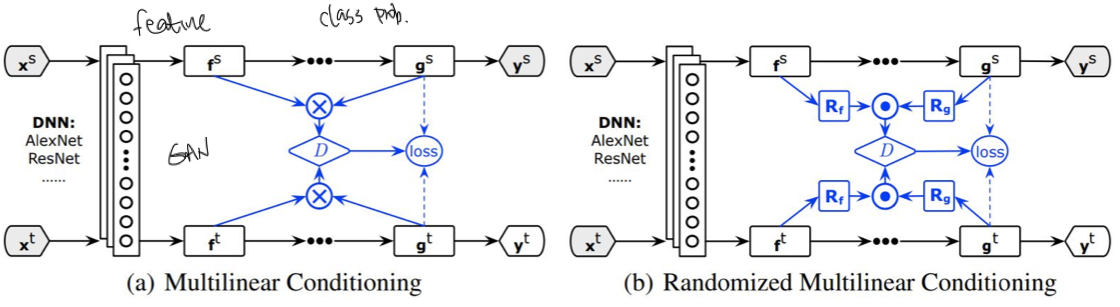

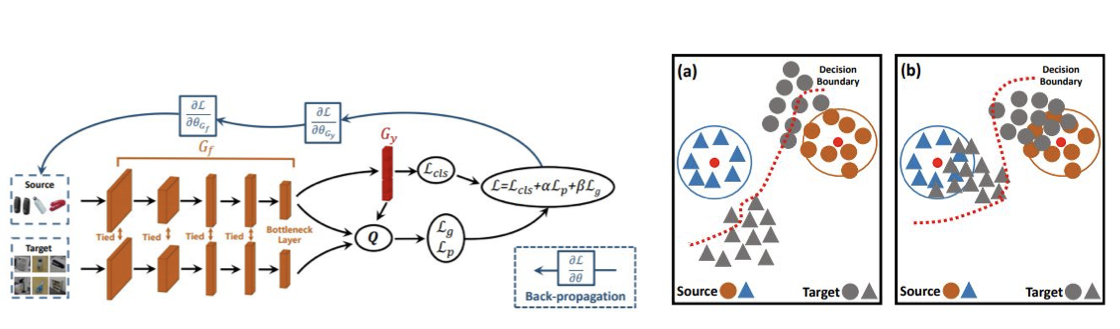

Conditional domain adversarial network (CDAN)
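
CDAN conditions the domain discriminator on the classifier's predictions by feeding it the multilinear map (outer product) of the feature vector f and the class-probability vector g, rather than f alone; for large dimensions a randomized approximation is used instead. A minimal NumPy sketch of both discriminator inputs follows; the function names are hypothetical, the discriminator itself is omitted, and drawing `R_f`, `R_g` from a standard normal is an assumption.

```python
import numpy as np

def multilinear_map(features, probs):
    """CDAN-style conditioning: flattened per-sample outer product f (x) g."""
    n = features.shape[0]
    return np.einsum("nf,nc->nfc", features, probs).reshape(n, -1)

def randomized_multilinear_map(features, probs, R_f, R_g):
    """Randomized variant: (f @ R_f) * (g @ R_g) / sqrt(dim), with R_f, R_g fixed random matrices."""
    out_dim = R_f.shape[1]
    return (features @ R_f) * (probs @ R_g) / np.sqrt(out_dim)

rng = np.random.default_rng(0)
feats = rng.normal(size=(4, 5))                 # 4 samples, 5-dim features
logits = rng.normal(size=(4, 3))                # 3 classes
probs = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)
z = multilinear_map(feats, probs)               # discriminator input, shape (4, 15)
R_f, R_g = rng.normal(size=(5, 8)), rng.normal(size=(3, 8))
z_rand = randomized_multilinear_map(feats, probs, R_f, R_g)   # shape (4, 8)
print(z.shape, z_rand.shape)
```

Each block of the flattened outer product is the feature vector scaled by one class probability, so the discriminator sees features and predictions jointly.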

-

-

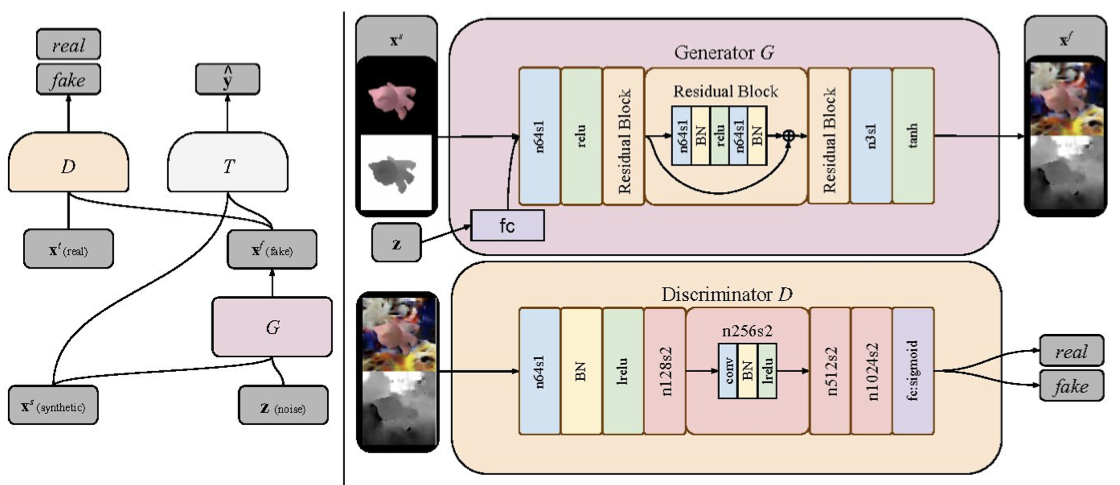

Reconstruction based: use GAN to generate fake images cross domain

- PixelDA: leverages a GAN (conditional GAN) to generate fake target images from source images.

-

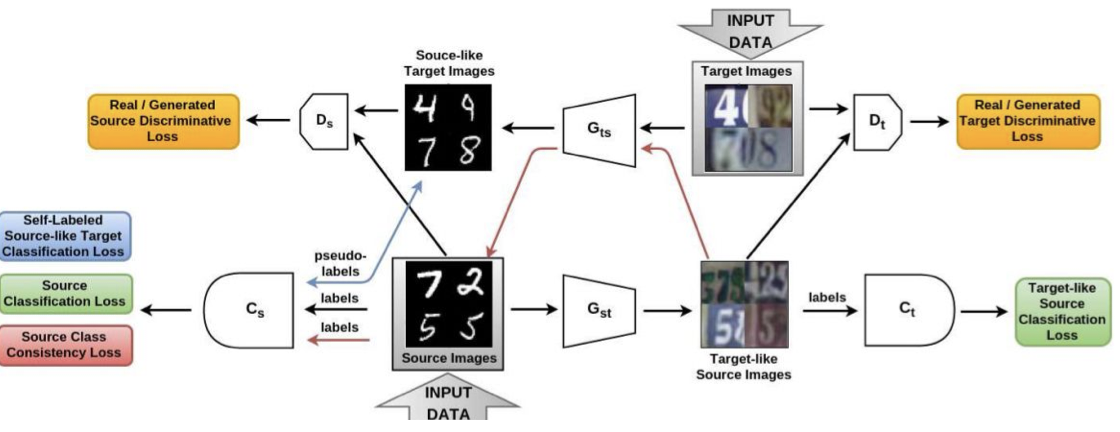

Cycle-consistency: SBADA-GAN: use cycle-gan to generate images cross domain
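
The CycleGAN-style cycle-consistency term can be sketched as follows: a mapping G from source to target and a mapping F back should compose to (roughly) the identity in both directions. In this minimal sketch, toy linear maps stand in for the generators (an assumption), the names are hypothetical, and the adversarial and other losses these methods add are omitted.

```python
import numpy as np

def cycle_consistency_loss(xs, xt, G, F):
    """L1 cycle loss: mapping to the other domain and back should recover the input."""
    forward = np.mean(np.abs(F(G(xs)) - xs))    # source -> target -> source
    backward = np.mean(np.abs(G(F(xt)) - xt))   # target -> source -> target
    return forward + backward

rng = np.random.default_rng(0)
xs, xt = rng.normal(size=(16, 6)), rng.normal(size=(16, 6))
A = rng.normal(size=(6, 6)) + 5.0 * np.eye(6)  # toy linear "generator" weights
B = rng.normal(size=(6, 6))                    # unrelated weights for a bad inverse

def G(x):            # hypothetical source -> target mapping
    return x @ A

def F_good(y):       # target -> source mapping that exactly inverts G
    return y @ np.linalg.inv(A)

def F_bad(y):        # target -> source mapping unrelated to G
    return y @ B

print(cycle_consistency_loss(xs, xt, G, F_good))  # close to 0 (floating-point error only)
print(cycle_consistency_loss(xs, xt, G, F_bad))   # clearly positive
```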

-

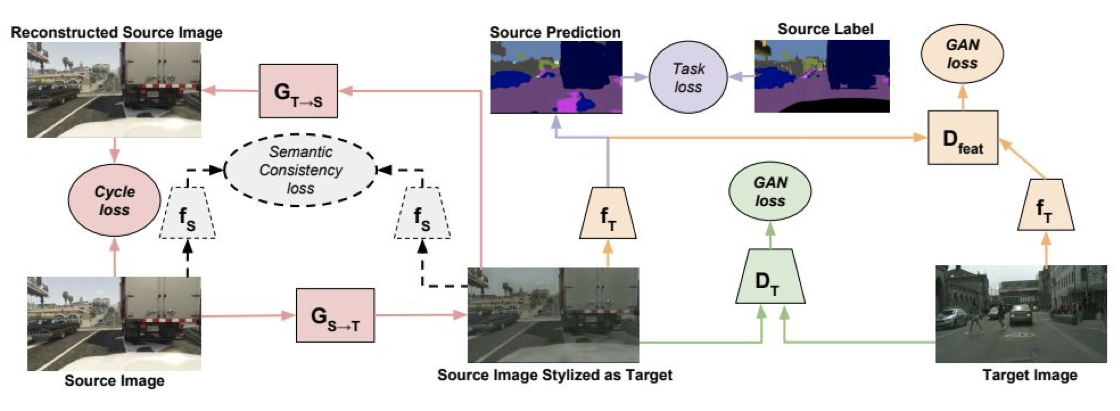

Cycle-consistency: CyCADA: adds a semantic loss

-

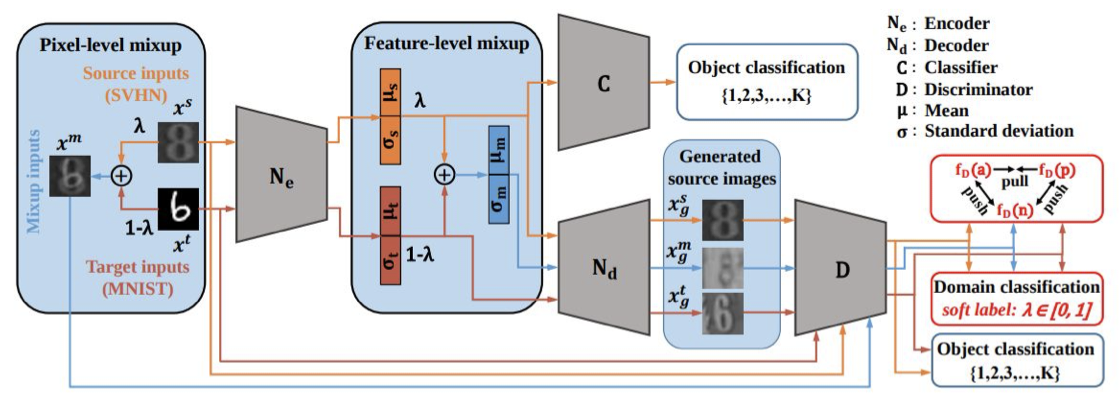

Adversarial domain adaptation with domain mixup (DM-ADA): mixup samples obtained interpolating between source and target images and domain discriminator learns to output soft scores
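
The mixup step in DM-ADA can be sketched as a pixel-level interpolation between paired source and target images whose mixing ratio doubles as the soft target for the domain discriminator. This is a minimal NumPy sketch under assumptions: drawing the ratio from Beta(alpha, alpha) follows standard mixup, the value `alpha=2.0` and the function names are hypothetical, and other parts of DM-ADA (for example the generator and any feature-level mixing) are omitted.

```python
import numpy as np

def domain_mixup(xs, xt, alpha, rng):
    """Interpolate paired source/target images; the ratio is also the soft domain label."""
    # One mixing ratio per pair, broadcast over the remaining (C, H, W) axes
    lam = rng.beta(alpha, alpha, size=(xs.shape[0],) + (1,) * (xs.ndim - 1))
    x_mix = lam * xs + (1.0 - lam) * xt
    return x_mix, lam.reshape(-1)   # soft label: 1 = pure source, 0 = pure target

def soft_bce(pred, soft_label, eps=1e-12):
    """Binary cross-entropy of discriminator outputs against soft domain labels."""
    return -np.mean(soft_label * np.log(pred + eps)
                    + (1.0 - soft_label) * np.log(1.0 - pred + eps))

rng = np.random.default_rng(0)
xs = rng.random((4, 3, 8, 8))   # 4 source images (C=3, 8x8)
xt = rng.random((4, 3, 8, 8))   # 4 target images
x_mix, soft_labels = domain_mixup(xs, xt, alpha=2.0, rng=rng)
# A discriminator that outputs exactly the mixing ratio attains the lowest possible loss
print(soft_bce(soft_labels, soft_labels), soft_bce(np.full(4, 0.5), soft_labels))
```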

Normalization-based methods: embed domain alignment layers in the network.

- DomaIn distribution Alignment Layers (DIAL): each domain has its own batch-normalization statistics, so the feature distributions of the two domains are aligned.

- Automatic DomaIn Alignment Layers (AutoDIAL)

- DomaIn Whitening Transform (DWT)
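
The DIAL idea can be sketched in a few lines: each domain is standardized with its own batch statistics, so a shift in mean or scale between domains disappears after the layer, unlike ordinary batch norm computed over the mixed batch. In this minimal sketch, sharing `gamma` and `beta` across domains is an assumption, the function names are hypothetical, and AutoDIAL's learned degree of alignment is not shown.

```python
import numpy as np

def standardize(x, eps=1e-5):
    """Batch-norm standardization with the batch's own mean and variance."""
    return (x - x.mean(axis=0)) / np.sqrt(x.var(axis=0) + eps)

def domain_specific_bn(xs, xt, gamma, beta, eps=1e-5):
    """DIAL-style layer: separate statistics per domain, shared affine parameters (assumed)."""
    return gamma * standardize(xs, eps) + beta, gamma * standardize(xt, eps) + beta

def shared_bn(xs, xt, gamma, beta, eps=1e-5):
    """Ordinary batch norm over the concatenated batch, for comparison."""
    out = gamma * standardize(np.vstack([xs, xt]), eps) + beta
    return out[: len(xs)], out[len(xs):]

rng = np.random.default_rng(0)
xs = rng.normal(0.0, 1.0, size=(256, 4))   # source activations
xt = rng.normal(3.0, 2.0, size=(256, 4))   # shifted, rescaled target activations
gamma, beta = np.ones(4), np.zeros(4)
for name, layer in [("domain-specific", domain_specific_bn), ("shared", shared_bn)]:
    ys, yt = layer(xs, xt, gamma, beta)
    print(name, "mean gap per channel:", np.round(yt.mean(axis=0) - ys.mean(axis=0), 3))
```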

-

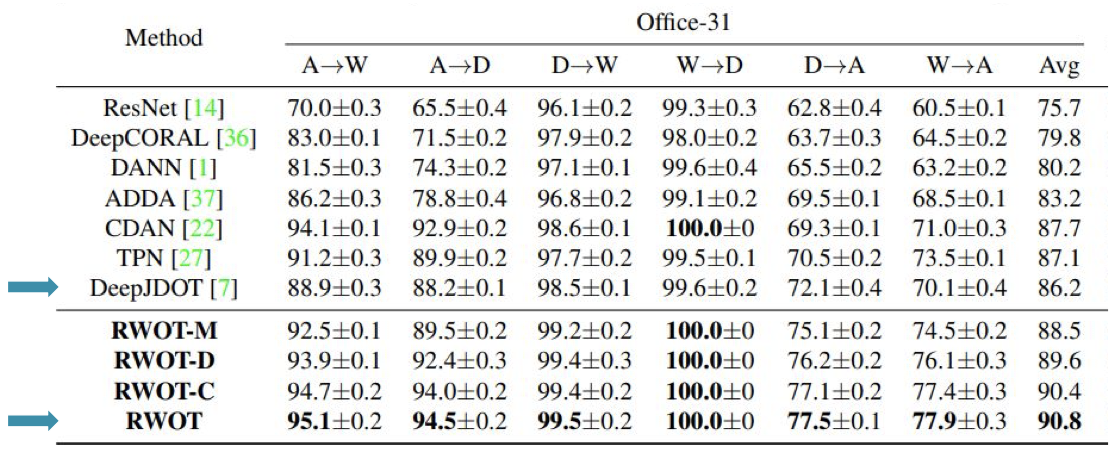

Optimal Transport-based: minimize domain discrepancy optimizing a measure based on Optimal Transport

-

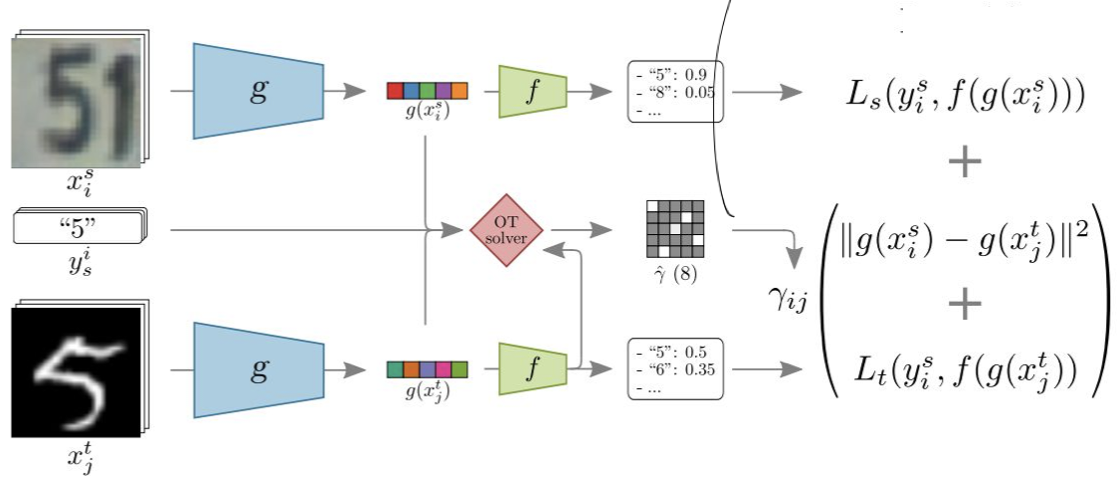

DeepJDOT

- Reliable Weighted Optimal Transport (RWOT)
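
DeepJDOT (listed above) aligns a minibatch of source samples with a minibatch of target samples through a transport plan whose cost combines a feature distance with a label term (the loss of the target prediction against the source label). The sketch below computes such a plan; it swaps in entropy-regularized Sinkhorn iterations for simplicity (DeepJDOT's own solver may differ), the weights `alpha`, `lam_t`, the regularization `reg`, and the function names are hypothetical, and the network update that would follow is omitted.

```python
import numpy as np

def sinkhorn(a, b, cost, reg=0.2, n_iters=1000):
    """Entropy-regularized OT plan between histograms a and b (Sinkhorn-Knopp iterations)."""
    K = np.exp(-cost / reg)
    u = np.ones_like(a)
    for _ in range(n_iters):
        v = b / (K.T @ u)
        u = a / (K @ v)
    return u[:, None] * K * v[None, :]

def jdot_cost(feat_s, feat_t, y_s, prob_t, alpha=1.0, lam_t=1.0):
    """Joint cost: feature distance plus loss of target predictions w.r.t. source labels."""
    feat_term = ((feat_s[:, None, :] - feat_t[None, :, :]) ** 2).sum(axis=-1)
    label_term = -y_s @ np.log(prob_t + 1e-12).T    # cross-entropy, shape (n_s, n_t)
    return alpha * feat_term + lam_t * label_term

rng = np.random.default_rng(0)
n_s, n_t, n_classes = 6, 5, 3
feat_s, feat_t = rng.normal(size=(n_s, 4)), rng.normal(size=(n_t, 4))
y_s = np.eye(n_classes)[rng.integers(0, n_classes, size=n_s)]     # one-hot source labels
logits_t = rng.normal(size=(n_t, n_classes))
prob_t = np.exp(logits_t) / np.exp(logits_t).sum(axis=1, keepdims=True)
C = jdot_cost(feat_s, feat_t, y_s, prob_t)
a, b = np.full(n_s, 1.0 / n_s), np.full(n_t, 1.0 / n_t)             # uniform sample weights
plan = sinkhorn(a, b, C / C.max())    # rescale the cost so exp(-C / reg) does not underflow
print("alignment loss:", np.sum(plan * C))
```

The plan's rows and columns sum to the sample weights, so each source sample spreads its mass over the target samples it is cheapest to match, jointly in feature and label space.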

Teacher-student-based methods: exploit the teacher-student paradigm to transfer knowledge to the target data.
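
One common instantiation of the teacher-student paradigm is the mean-teacher scheme (used, for example, in self-ensembling for domain adaptation): the teacher's weights are an exponential moving average of the student's, and the student is trained to agree with the teacher on unlabeled target data. A minimal sketch of those two pieces follows, assuming weights stored in a dict of NumPy arrays and a squared-difference consistency loss; both choices and the names are hypothetical.

```python
import numpy as np

def ema_update(teacher, student, decay=0.99):
    """Teacher weights follow an exponential moving average of the student's weights."""
    return {name: decay * teacher[name] + (1.0 - decay) * student[name] for name in teacher}

def consistency_loss(student_probs, teacher_probs):
    """Squared difference between student and teacher predictions on unlabeled target data."""
    return np.mean(np.sum((student_probs - teacher_probs) ** 2, axis=1))

teacher = {"w": np.zeros(3)}
student = {"w": np.ones(3)}     # pretend the student has settled on all-ones weights
for _ in range(1000):
    teacher = ema_update(teacher, student)
print(teacher["w"])              # approaches the student weights: 1 - 0.99**1000 per entry
```

The slowly moving teacher gives more stable targets on the target domain than the student's own predictions would.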

Results

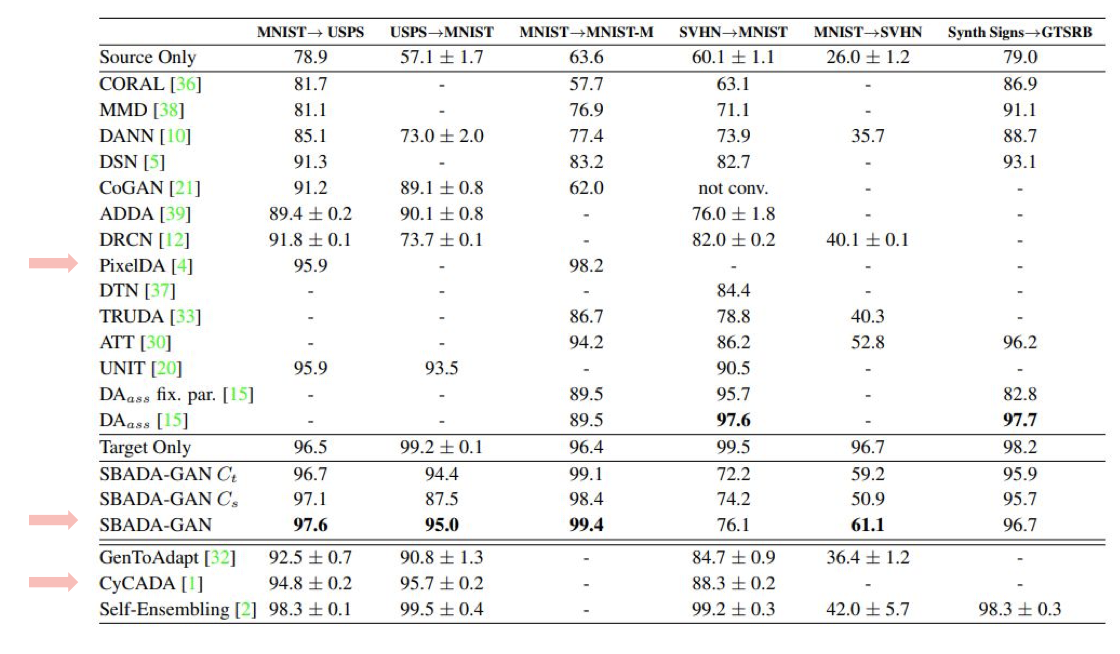

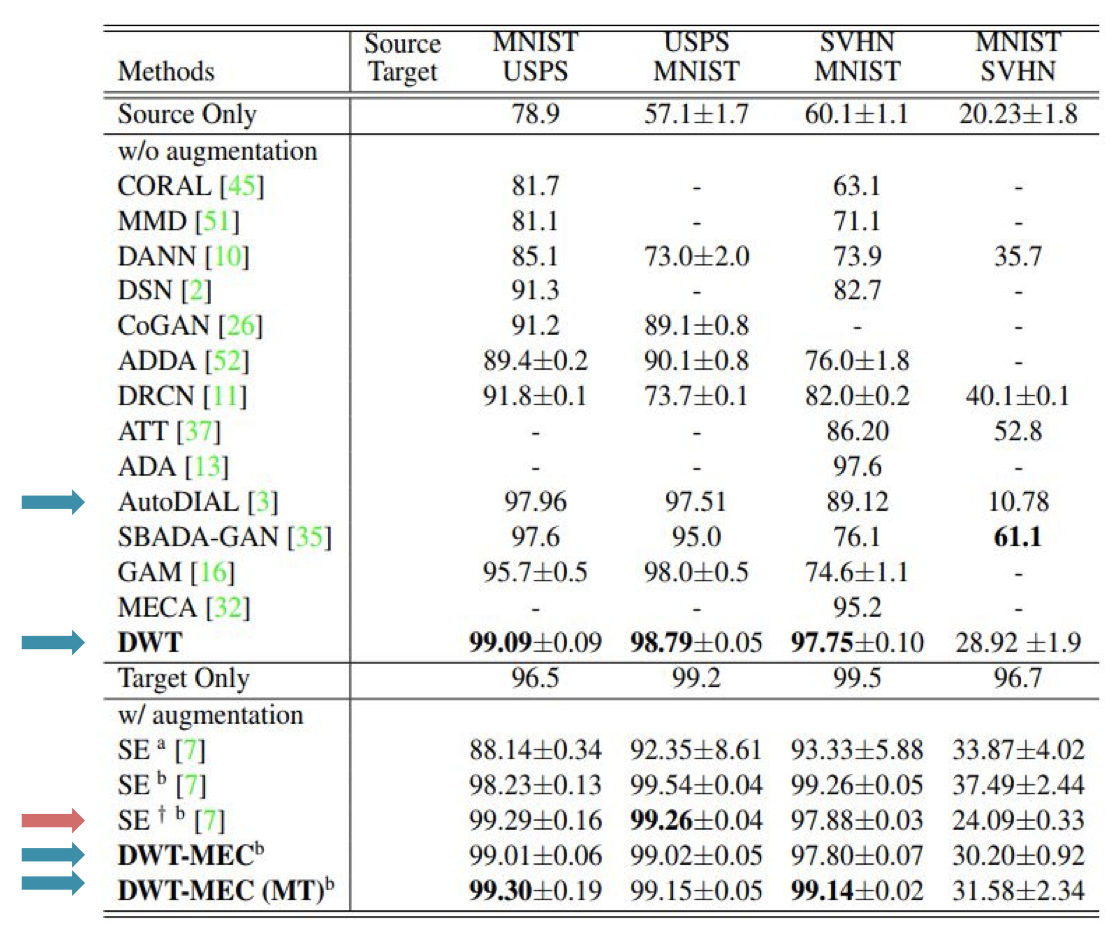

These methods have been evaluated on two tasks: image classification on the Office-31 dataset and digit recognition (also an image classification task) on the Digits datasets. On each dataset, some methods outperform the fully supervised baseline; however, no approach works well on both datasets.

Office-31

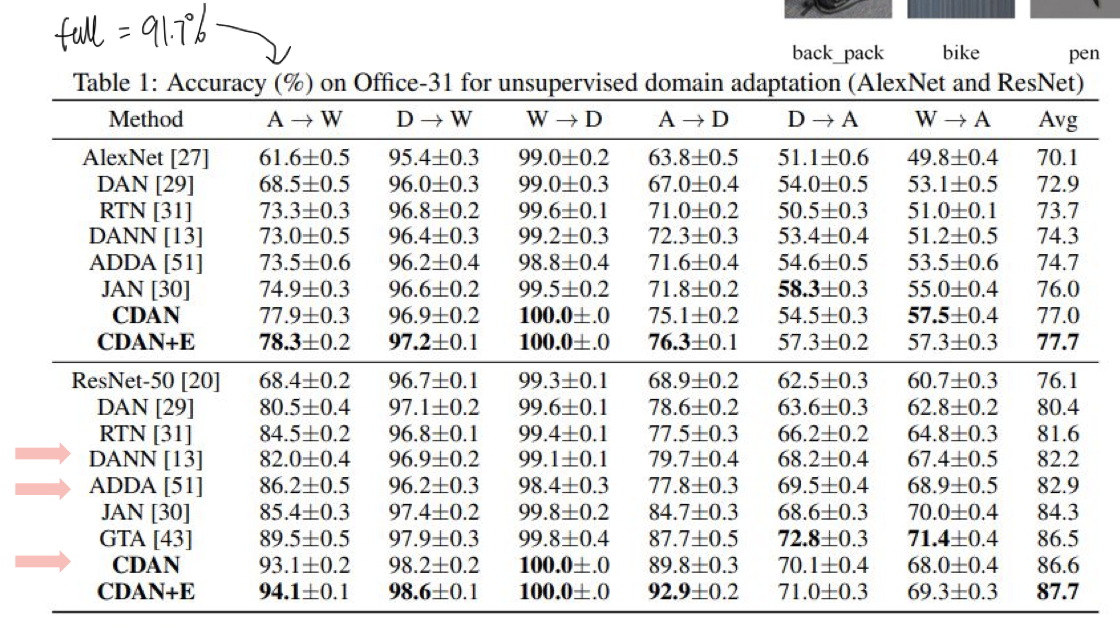

The fully supervised method (full) reaches an accuracy of 91.7%. The table shows that some unsupervised domain adaptation methods can outperform this fully supervised baseline, e.g.,

- Reliable Weighted Optimal Transport (RWOT): an optimal transport-based method

- Conditional Domain Adversarial Network (CDAN): an adversarial-based method (without fake data generation)

Digits

With supervised training on the target domain, accuracy is 96.5% on USPS, 96.7% on SVHN, and 99.2% on MNIST. Some unsupervised domain adaptation methods can outperform this fully supervised baseline on some domain pairs, e.g.,

- Symmetric Bi-Directional Adaptive GAN (SBADA-GAN): outperforms the baseline on MNIST→USPS and MNIST→MNIST-M, but is significantly lower on the others;

- Unsupervised Domain Adaptation using Feature-Whitening and Consensus Loss (DWT): outperforms the baseline on MNIST→USPS but is slightly lower on the others.