Ziya2: Data-centric Learning is All LLMs Need

This is my reading note for Ziya2: Data-centric Learning is All LLMs Need. The paper discusses how to improve LLM performance by improving the quality of the training data. In addition, it finds that supervised data contributes more than unsupervised data during pre-training.

Introduction

However, the development of LLMs still faces several issues, such as high cost of training models from scratch, and continual pre-training leading to catastrophic forgetting, etc. Although many such issues are addressed along the line of research on LLMs, an important yet practical limitation is that many studies overly pursue enlarging model sizes without comprehensively analyzing and optimizing the use of pretraining data in their learning process, as well as appropriate organization and leveraging of such data in training LLMs under cost-effective settings. (p. 1)

In this work, we focus on the technique of continual pre-training and understanding the intricate relationship between data and model performance. We delve into an in-depth exploration of how the high-quality pre-training data enhance the performance of an LLM, while keeping its size and structure essentially unchanged. (p. 2)

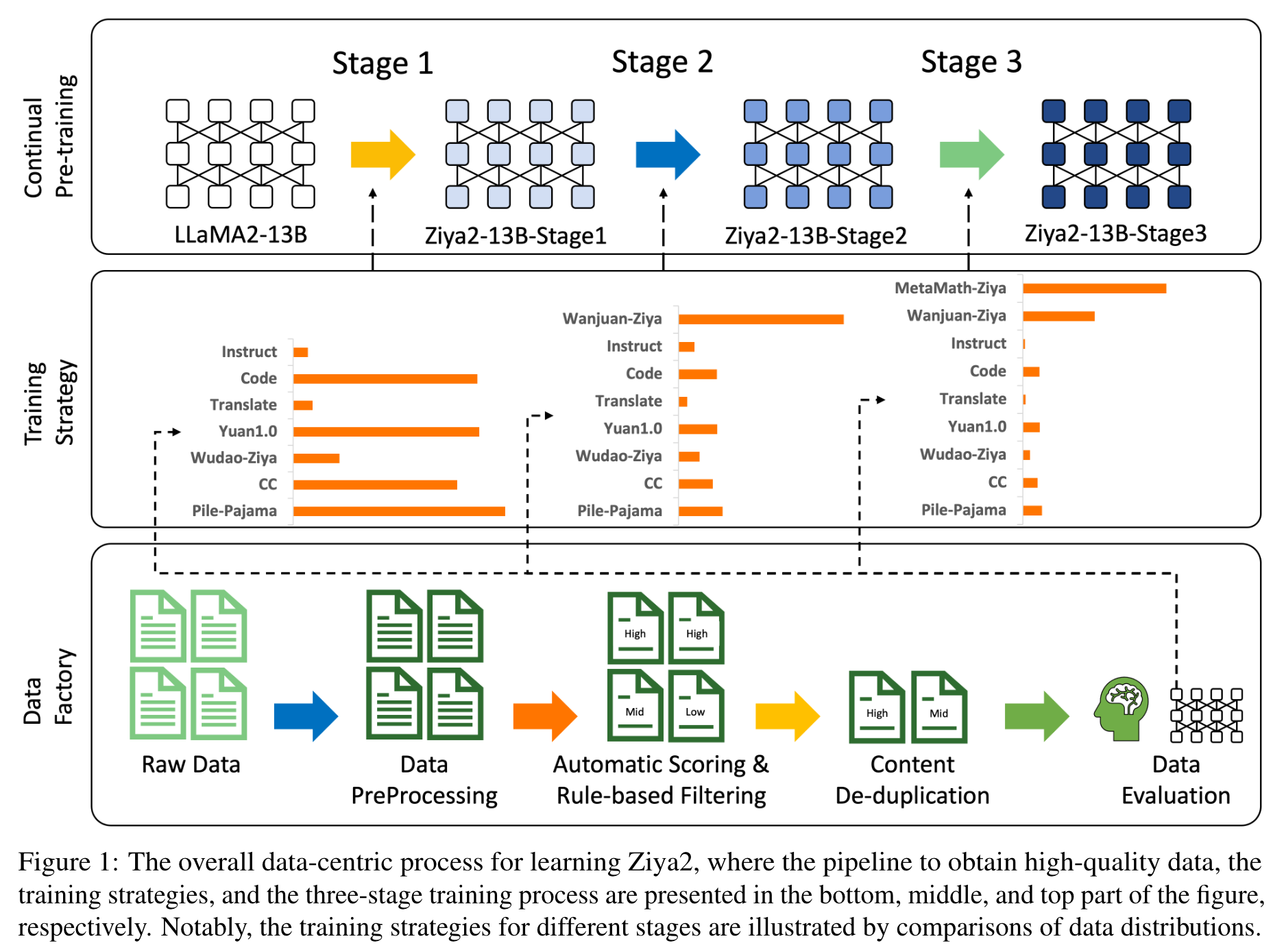

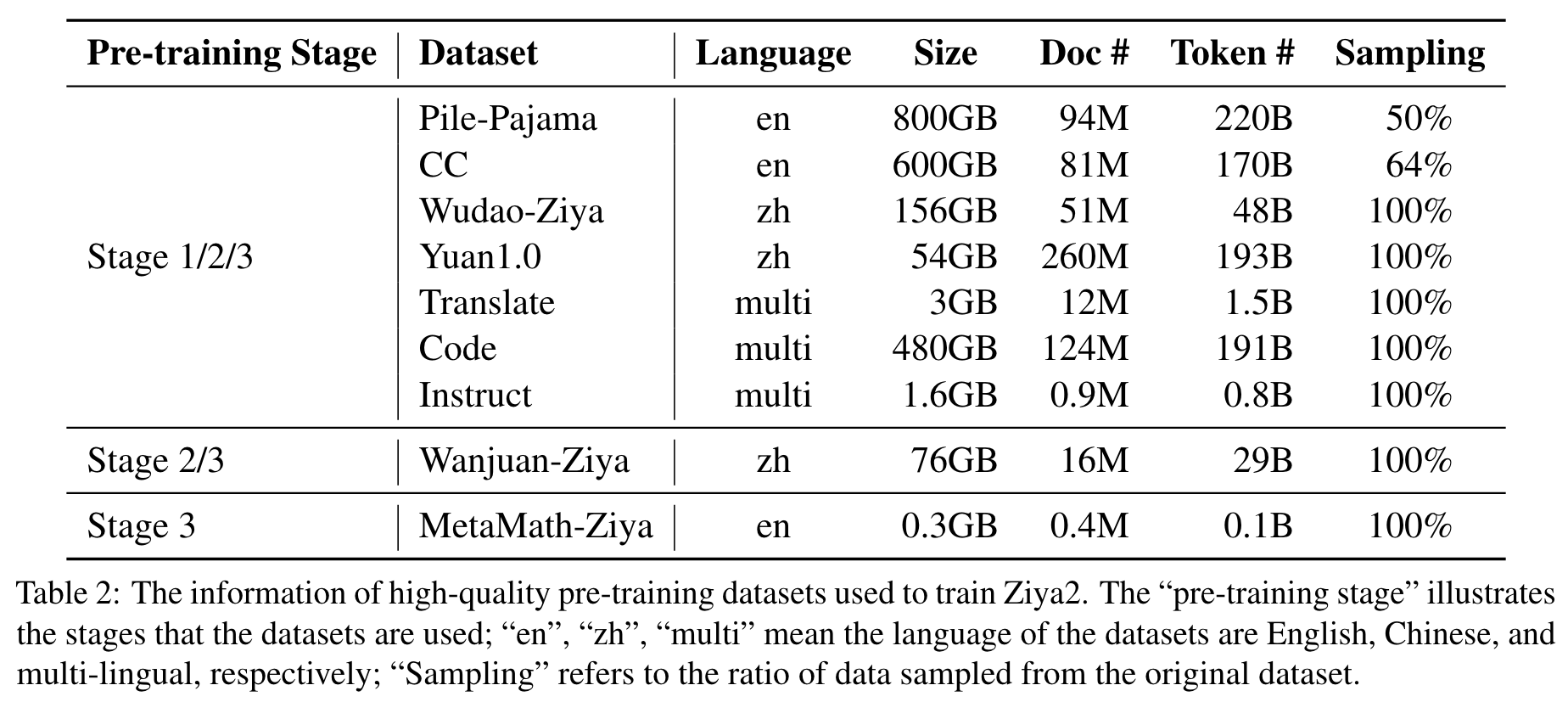

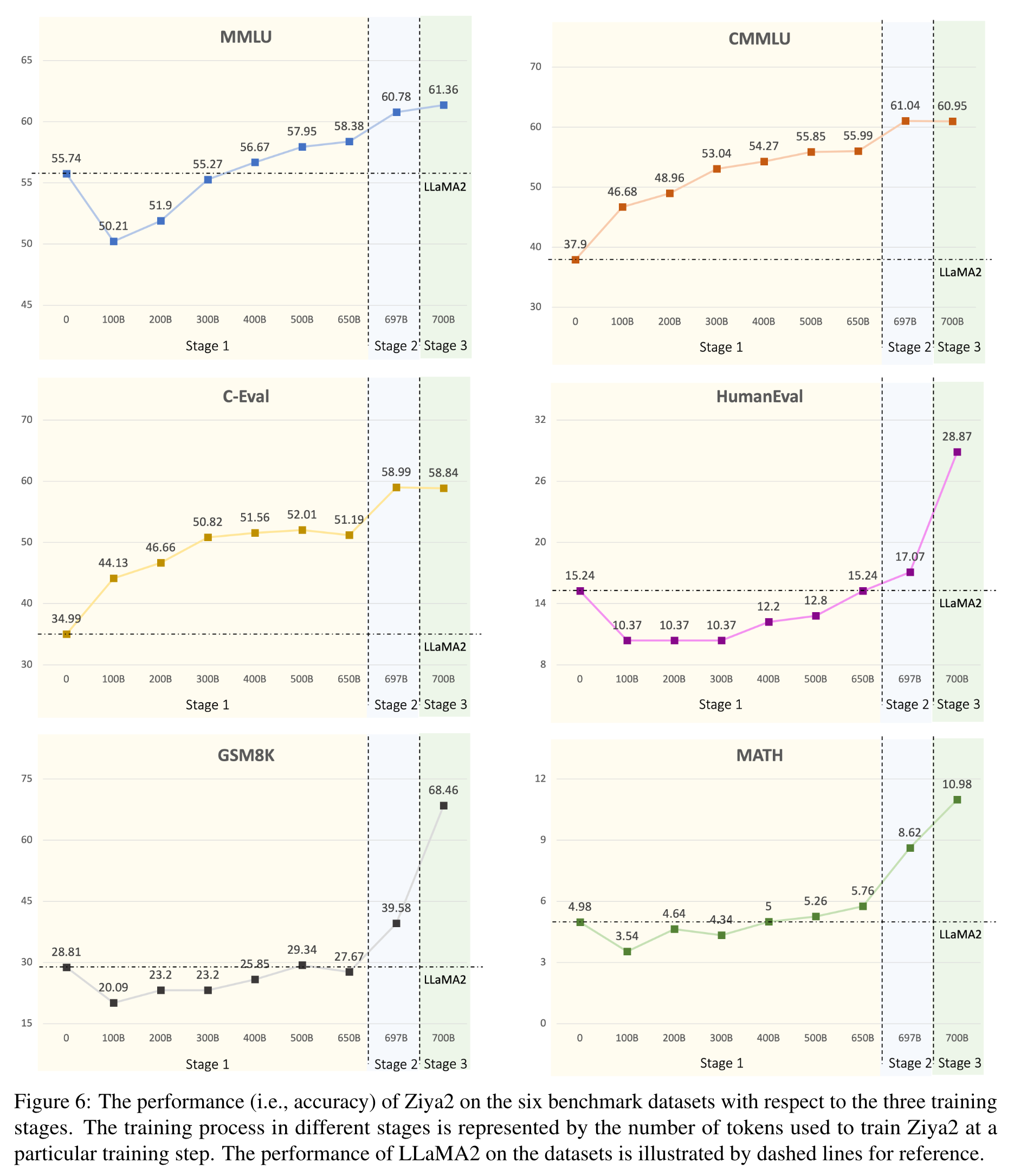

Specifically, a three-stage training process has been adopted to leverage both general and domain-specific corpora to enhance Ziya2’s bilingual generation capabilities, where the first stage trains Ziya2 with huge high-quality data, including Chinese and English languages; the second stage uses supervised data to optimize the LLM; and the third stage mainly focuses on training Ziya2 with mathematical data. (p. 2)

The Approach

It commences with the establishment of a data pipeline capable of continuous cleansing and evaluation of extensive web-based datasets, ensuring the acquisition of large-scale, high-quality data for the training of Ziya2. (p. 3)

The Data Factory

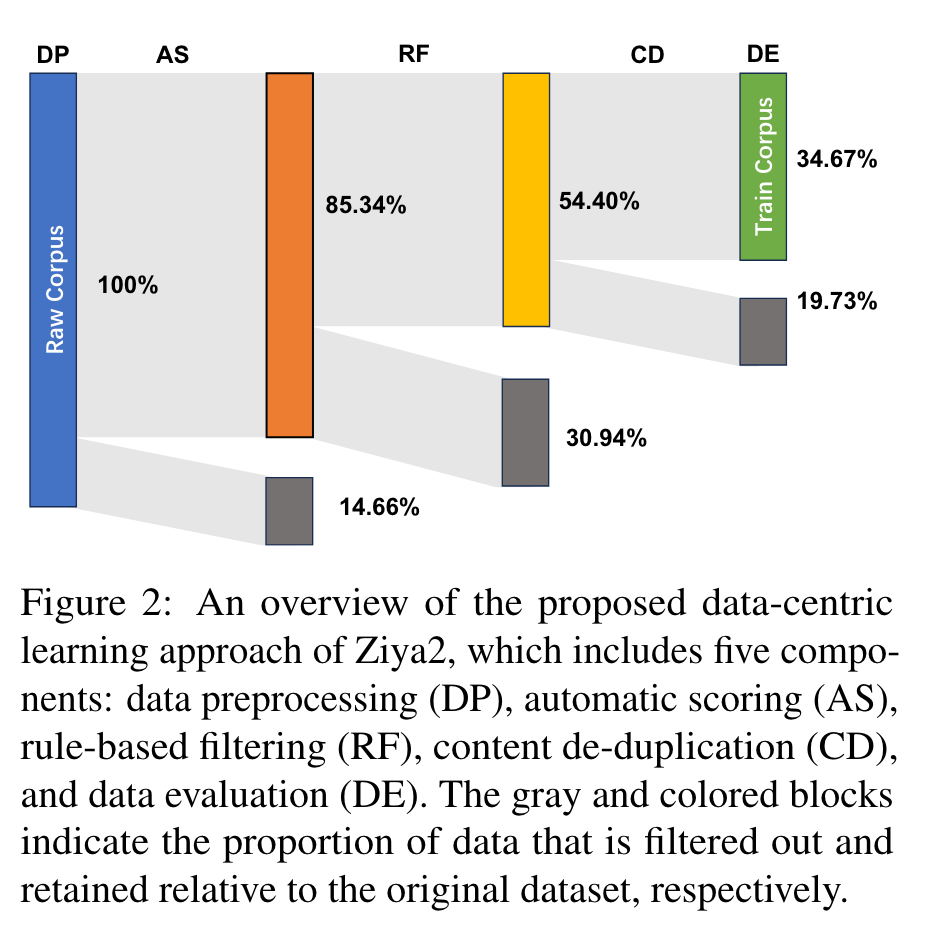

- Data Preprocessing (DP). The first is language detection on the collected corpus and selection of only Chinese and English language data. (p. 3)

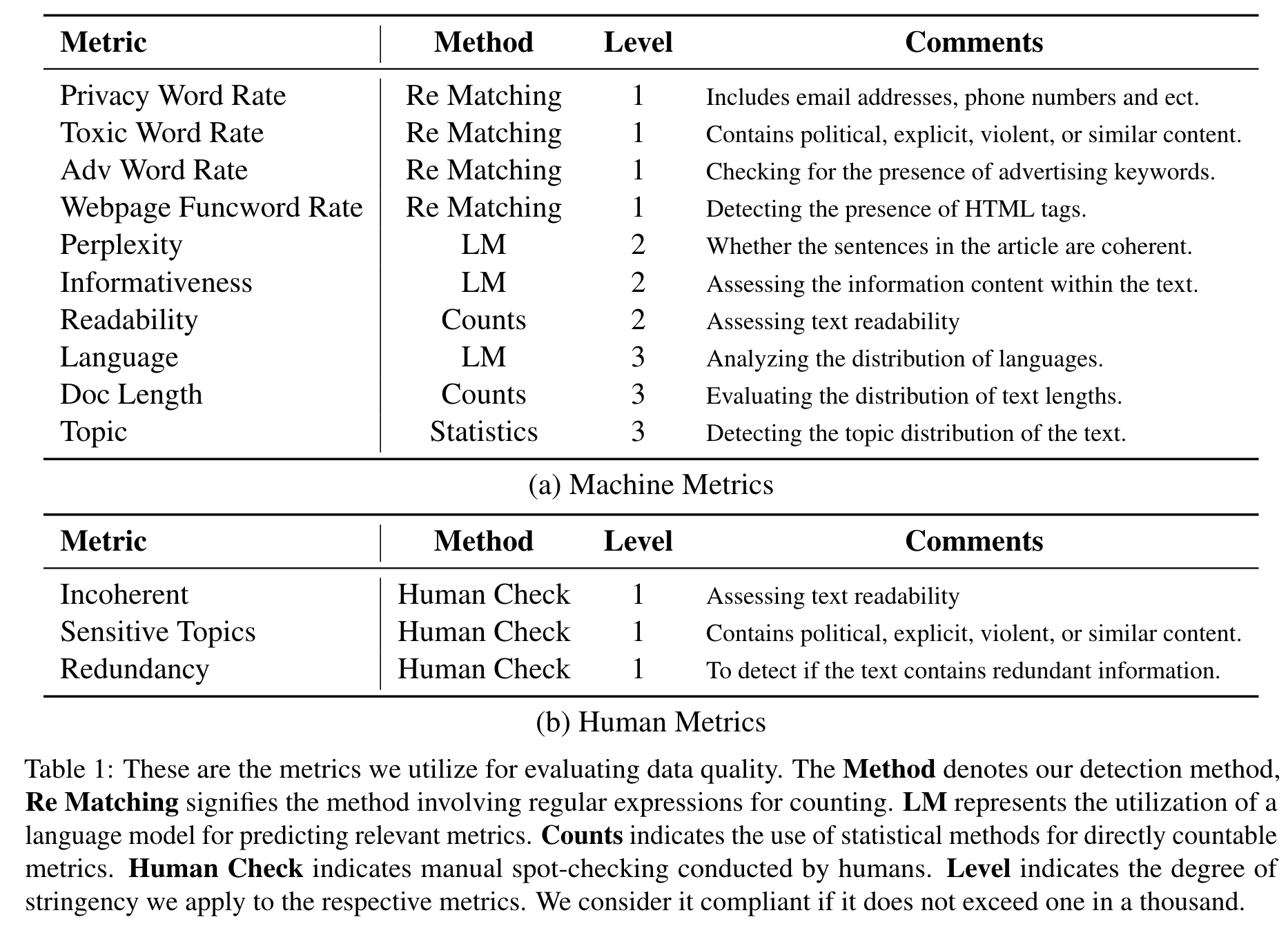

- Automatic Scoring (AS). We employ KenLM (Heafield, 2011) to train two language models from Chinese and English Wikipedia, respectively, and conduct perplexity (PPL) assessments on the input data. Subsequently, we select data based on their ranking from lower to higher PPL scores, with the top 30% of the data marked as high-quality and those between 30% and 60% in the PPL ranking as medium quality. (p. 3)

- Rule-based Filtering (RF). It is required to eliminate text with significant tendencies toward pornography, violence, politics, and advertising. Therefore, we design more than 30 filtering rules at three granular levels: document, paragraph, and sentence, and filter out texts by applying rules from large to small granular levels. At the document level, rules are principally designed around content length and format, while at the paragraph and sentence levels, the focus of rules shifts to the toxicity of the content. (p. 3)

- Content De-duplication (CD). Repetitive content does not significantly improve training and hurts training efficiency. We used Bloomfilter (Bloom, 1970) and Simhash (Charikar, 2002) to de-duplicate the text in the data through the following steps. First, we find that Common Crawl and other open-source datasets contain substantial duplication of web pages and thus use Bloomfilter to de-duplicate URLs, which significantly reduces the computational load required for subsequent content de-duplication. Second, our analysis shows that many remaining web pages share similar content, where the main differences among them are special characters such as punctuation marks and emoticons. Thus, we perform a round of precise deduplication on these web pages. Third, we employ SimHash for the fuzzy de-duplication of textual content for the remaining data. (p. 4)

- Data Evaluation (DE). Afterwards, we compute the rate of the unqualified examples over all evaluated instances. If the rate is lower than a threshold, it proves the data meets our requirement, so we use them as a part of the training corpus. If the rate is higher than the threshold, which means the data does not meet our standard, we improve the processes of automatic scoring, rule-based filtering, and content de-duplication, which are then utilized to process data. (p. 5)
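To make the scoring and de-duplication steps above concrete, here is a minimal sketch, not the authors' pipeline. It assumes perplexity scores are already computed (for example by a KenLM model), labels everything below the 60% mark as "low" (my own label, not the paper's), tokenises on whitespace, and uses a Hamming-distance threshold of 3. All function names and the threshold are hypothetical.

```python
import hashlib

def bucket_by_ppl(ppl_scores):
    """Rank by perplexity (lower is better): the first 30% of the ranking
    is 'high', the next 30% 'medium', the remainder 'low' (assumed label)."""
    n = len(ppl_scores)
    order = sorted(range(n), key=lambda i: ppl_scores[i])
    labels = [""] * n
    for rank, idx in enumerate(order):
        if rank * 10 < 3 * n:
            labels[idx] = "high"
        elif rank * 10 < 6 * n:
            labels[idx] = "medium"
        else:
            labels[idx] = "low"
    return labels

def normalize(text):
    """Drop punctuation, emoticons and whitespace so that pages differing
    only in special characters compare equal (exact de-duplication)."""
    return "".join(ch.lower() for ch in text if ch.isalnum())

def simhash(text, bits=64):
    """SimHash fingerprint over whitespace tokens (assumed tokenisation)."""
    weights = [0] * bits
    for token in text.split():
        digest = hashlib.blake2b(token.encode("utf-8"), digest_size=8).digest()
        h = int.from_bytes(digest, "big")
        for b in range(bits):
            weights[b] += 1 if (h >> b) & 1 else -1
    return sum(1 << b for b in range(bits) if weights[b] > 0)

def hamming(a, b):
    """Number of differing bits between two fingerprints."""
    return bin(a ^ b).count("1")

def deduplicate(docs, max_distance=3):
    """Keep the first copy of each document: exact match after
    normalisation first, then fuzzy match by SimHash distance."""
    seen_exact, kept_hashes, kept = set(), [], []
    for doc in docs:
        key = normalize(doc)
        if key in seen_exact:
            continue
        fp = simhash(doc)
        if any(hamming(fp, other) <= max_distance for other in kept_hashes):
            continue
        seen_exact.add(key)
        kept_hashes.append(fp)
        kept.append(doc)
    return kept
```

The pairwise fingerprint scan is quadratic in the number of kept documents. At web scale one would index fingerprints by bit blocks instead, but that is beyond what these notes cover.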

The Architecture of Ziya2

The architecture of Ziya2 is based on LLaMA2, where we propose to improve the quality of input data processing, token and hidden representations through enhancing tokenizer, positional embedding, as well as layer normalization and attention, respectively, so as to facilitate Chinese text processing, adapt to the changes in text length and data distribution, and improve its efficiency and stability in pre-training. (p. 5)

- Tokenizer. We adopt a BPE (Byte-Pair Encoding) tokenizer (Sennrich et al., 2015). For the vocabulary of the tokenizer, we reuse over 600 Chinese characters originally used in LLaMA and add an extra 7,400 commonly used Chinese characters, which include both simplified and traditional Chinese characters as well as Chinese punctuation marks. (p. 5)

- Positional Embedding. LLaMA2 employs rotary position encoding (Su et al., 2021), which, through the mechanism of absolute position encoding, accomplishes relative position encoding. To avoid the overflow issues associated with mixed precision, we implement rotary position encoding using FP32 precision, thereby accommodating the variation in data length distribution in continual training. (p. 6)

- Layer Normalization and Attention. The direct implementation of mixed precision training in layer normalization and attention leads to precision overflow, which results in training instability. We improve layer normalization and attention in LLaMA2. Specifically, for layer normalization, we utilize an APEX RMSNorm (Zhang and Sennrich, 2019) implementation, which also operates under FP32 precision training. (p. 6)
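The remark that rotary encoding achieves relative position encoding through an absolute one can be checked numerically. The sketch below uses the adjacent-pair formulation from the RoPE paper with the conventional base of 10000. It is an illustration only: it is not the LLaMA2 or Ziya2 code, and Python floats are double precision rather than FP32. The function names are mine.

```python
import math

def rope(vec, position, base=10000.0):
    """Rotate each adjacent pair (x0, x1), (x2, x3), ... of `vec`
    by the angle position * base**(-i / dim), where i is the pair's
    first index. `vec` must have even length."""
    dim = len(vec)
    out = []
    for i in range(0, dim, 2):
        angle = position * base ** (-i / dim)
        c, s = math.cos(angle), math.sin(angle)
        x, y = vec[i], vec[i + 1]
        out.extend([x * c - y * s, x * s + y * c])
    return out

def dot(a, b):
    """Plain inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

q = [0.3, -1.2, 0.7, 0.5]
k = [1.1, 0.4, -0.6, 0.9]

# Both pairs of positions are 4 apart, so the attention scores agree.
score_near = dot(rope(q, 3), rope(k, 7))
score_far = dot(rope(q, 103), rope(k, 107))
```

Each vector is rotated by its own absolute position, yet the score depends only on the offset between the two positions, which is why `score_near` and `score_far` match up to rounding error.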

Continual Pre-training

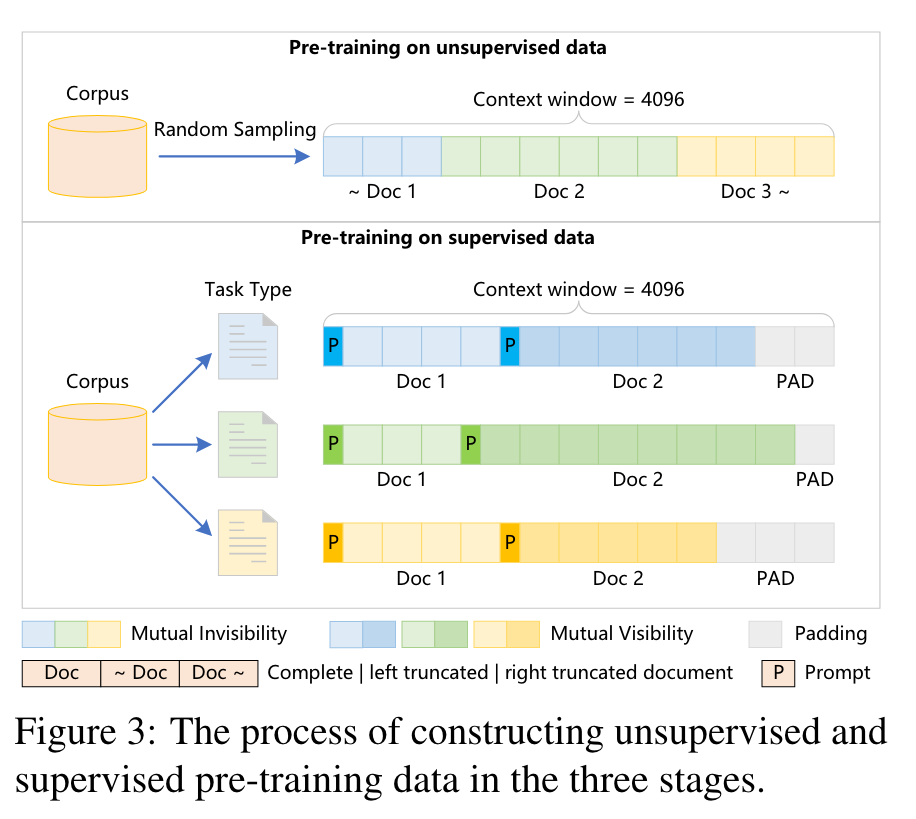

We thoroughly shuffle these datasets, concatenate different data segments into a 4,096 context as a training example, and use an attention mask to prevent different data segments from influencing each other. (p. 6)
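A rough sketch of this packing scheme follows. It is one plausible reading rather than the authors' code: segments are concatenated into a single context and truncated at the context length, and a causal mask is built that only allows attention inside the same segment. The function names and the tiny context length of 8 (instead of 4,096) are illustrative assumptions.

```python
def pack(segments, context_len):
    """Concatenate token-id lists into one context and record which
    segment each position came from (segment ids start at 1)."""
    tokens, seg_ids = [], []
    for seg_id, seg in enumerate(segments, start=1):
        tokens.extend(seg)
        seg_ids.extend([seg_id] * len(seg))
    return tokens[:context_len], seg_ids[:context_len]

def attention_mask(seg_ids):
    """mask[i][j] is True when position i may attend to position j:
    j must not be in the future and must belong to the same segment."""
    n = len(seg_ids)
    return [[j <= i and seg_ids[i] == seg_ids[j] for j in range(n)]
            for i in range(n)]

tokens, seg_ids = pack([[11, 12], [21, 22, 23]], context_len=8)
mask = attention_mask(seg_ids)
```

The resulting mask is block-diagonal and lower-triangular within each block, so tokens from the second segment never see the first one even though both share a training example.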

Different from fine-tuning with instructions, we still employ the LLM’s next token prediction training method. Instead of randomly combining data as in the first stage, we concatenate the same type of instruct data into a 4,096 context as a training example, where the rest of the positions are filled by special pad tokens. To retain the knowledge already acquired by Ziya2, we also sample unsupervised Chinese and English data in the same proportion as the instruct data for continual training. (p. 7)

In the third stage, we incorporate supervised data related to inference (p. 7)

We train the model using the AdamW optimizer. Due to the incorporation of additional Chinese and code data in our dataset compared to LLaMA2, there exists a disparity in the overall data distribution. As a result, a more extended warmup is beneficial for continual pre-training. Consequently, instead of the 0.4% warmup ratio utilized in LLaMA2, we adopt a warmup ratio of 1%. This is followed by a cosine learning rate decay schedule, reaching a final learning rate of 1e-5. (p. 7)
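The schedule described here can be sketched as a small function. The 1% warmup ratio and the final learning rate of 1e-5 come from the excerpt; the linear warmup shape, the peak learning rate, and the step count used in the example are assumptions, since these notes do not record them.

```python
import math

def learning_rate(step, total_steps, peak_lr, final_lr=1e-5, warmup_ratio=0.01):
    """Linear warmup over the first `warmup_ratio` of training, then
    cosine decay from `peak_lr` down to `final_lr`."""
    warmup_steps = max(1, round(total_steps * warmup_ratio))
    if step < warmup_steps:
        # Linear ramp that reaches peak_lr on the last warmup step.
        return peak_lr * (step + 1) / warmup_steps
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))
    return final_lr + (peak_lr - final_lr) * cosine

# Hypothetical values for illustration only.
TOTAL_STEPS = 10_000
PEAK_LR = 1e-4
schedule = [learning_rate(s, TOTAL_STEPS, PEAK_LR) for s in range(TOTAL_STEPS + 1)]
```

With these numbers the warmup lasts 100 steps, compared with 40 steps under a 0.4% ratio, which gives the optimizer more time to adapt to the shifted data distribution before the learning rate peaks.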

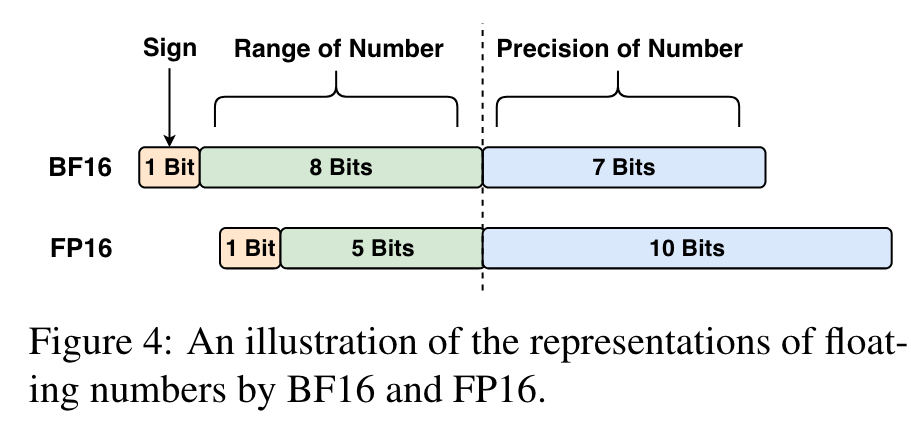

Upon analysis, we identify that the limited numerical range of FP16 leads to overflow problems, especially in operations such as softmax. As illustrated in Figure 4, in comparison to FP16, BF16 offers superior precision. Hence, for the continual pre-training of Ziya2-13B, we opt for BF16 mixed-precision training. (p. 7)
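The overflow behaviour can be reproduced with the standard library alone. The sketch below rounds a value to FP16 using the `struct` module's half-precision format and simulates BF16 by truncating a float32 to its upper 16 bits. Truncation rather than round-to-nearest is a simplification of mine, and the helper names are hypothetical.

```python
import math
import struct

def to_fp16(x):
    """Round x to IEEE half precision; report overflow as infinity."""
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:
        return float("inf")

def to_bf16(x):
    """Simulate bfloat16: keep the sign, the 8 exponent bits and the
    top 7 mantissa bits of the float32 encoding (truncation)."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFF0000))[0]

# exp(12) is about 162755, above the FP16 maximum of 65504.
big = math.exp(12)
fp16_big = to_fp16(big)     # overflows
bf16_big = to_bf16(big)     # stays finite

# Near 1.0 the picture flips: FP16 resolves finer steps than BF16.
fp16_small = to_fp16(1.001)
bf16_small = to_bf16(1.001)
```

An unnormalised softmax exponent such as `exp(12)` already overflows FP16, while BF16 keeps it finite at the cost of a coarser mantissa. Read this way, the advantage of BF16 seems to lie in its wider exponent range rather than in finer resolution.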

Experiment

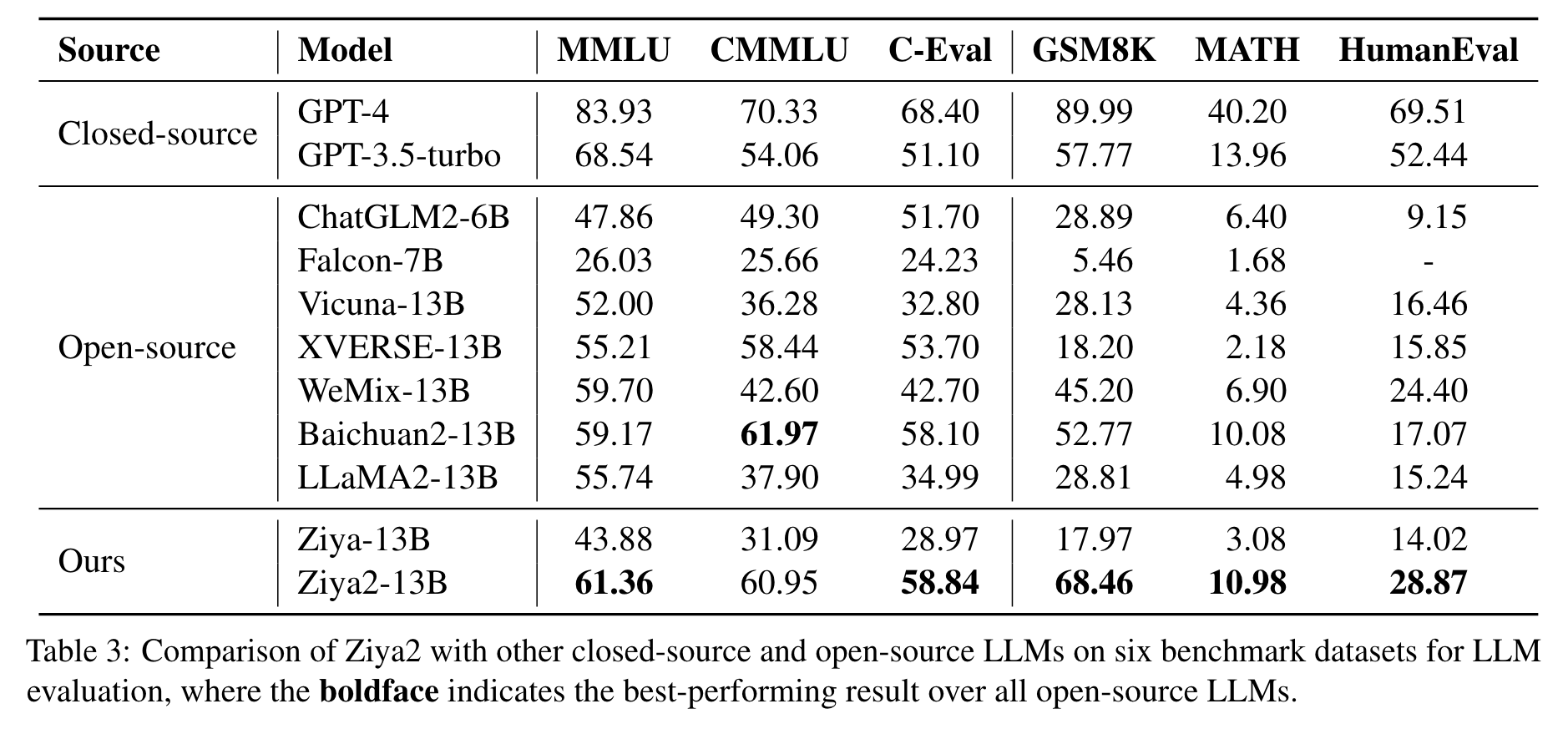

Task

- MMLU. The unique aspect of MMLU is that it tests the models’ world knowledge and problem-solving capabilities. (p. 8)

- GSM8K. The objective of this dataset is to facilitate the task of question answering on fundamental problems that necessitate reasoning through multiple steps (p. 8)

- MATH. A unique feature of this dataset is that each problem comes with a comprehensive step-by-step solution. These detailed solutions serve as a valuable resource for teaching models to generate derivation processes and explanations. (p. 8)

- HumanEval. This dataset serves as a benchmark for evaluating the ability of a system to generate functionally correct programs based on provided doc strings. (p. 8)

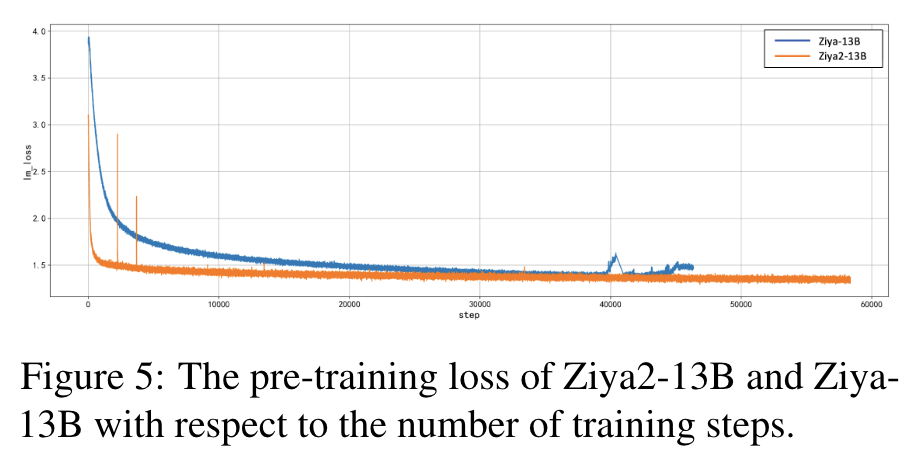

During the first training stage, the performance of Ziya2 on MMLU initially degrades owing to the inclusion of a large amount of Chinese corpus in the training data, which differs from the setting of LLaMA2. (p. 10)

These results underscore the greater contribution of supervised data over unsupervised data to LLM pre-training. Therefore, employing supervised data for pre-training is able to reduce the number of training steps, thereby economizing costs. (p. 10)